{{lead too short|date=February 2013}}

'''Robust statistics''' are [[statistics]] with good performance for data drawn from a wide range of [[probability distribution]]s, especially for distributions that are not [[normal distribution|normally distributed]]. Robust [[Statistics|statistical]] methods have been developed for many common problems, such as estimating [[location parameter|location]], [[scale parameter|scale]] and [[regression coefficient|regression parameters]]. One motivation is to produce [[statistical method]]s that are not unduly affected by [[outlier]]s. Another motivation is to provide methods with good performance when there are small departures from parametric distributions. For example, robust methods work well for mixtures of two normal distributions with different standard deviations (say, one and three); under this model, non-robust methods such as a t-test perform poorly.

== Introduction ==

Robust statistics seeks to provide methods that emulate popular statistical methods, but which are not unduly affected by [[outliers]] or other small departures from model assumptions. In [[statistics]], classical estimation methods rely heavily on assumptions which are often not met in practice. In particular, it is often assumed that the data errors are normally distributed, at least approximately, or that the [[central limit theorem]] can be relied on to produce normally distributed estimates. Unfortunately, when there are outliers in the data, classical [[estimator]]s often perform very poorly, as judged by the ''[[#Breakdown_point|breakdown point]]'' and the ''[[#Influence_function_and_sensitivity_curve|influence function]]'' described below.

The practical effect of problems seen in the influence function can be studied empirically by examining the [[sampling distribution]] of proposed estimators under a [[mixture model]], where one mixes in a small amount (1–5% is often sufficient) of contamination. For instance, one may use a mixture of 95% from a normal distribution and 5% from a normal distribution with the same mean but a significantly higher standard deviation (representing outliers).
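A minimal simulation sketch of such a study, in Python. The contaminating standard deviation (10), the sample size (50), the number of replications and the seed are illustrative assumptions rather than values from the literature, and the helper names are hypothetical. The sketch compares the Monte Carlo variances of the sample mean and the sample median under a 95%/5% contaminated normal model.

```python
import random
import statistics


def contaminated_sample(rng, n, eps=0.05, sd_out=10.0):
    """Draw n points from the mixture (1 - eps) * N(0, 1) + eps * N(0, sd_out**2)."""
    return [rng.gauss(0.0, sd_out if rng.random() < eps else 1.0) for _ in range(n)]


def sampling_variances(reps=2000, n=50, seed=1):
    """Monte Carlo variances of the sample mean and the sample median."""
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(reps):
        xs = contaminated_sample(rng, n)
        means.append(statistics.fmean(xs))
        medians.append(statistics.median(xs))
    return statistics.variance(means), statistics.variance(medians)


var_mean, var_median = sampling_variances()
print(f"variance of the mean:   {var_mean:.4f}")
print(f"variance of the median: {var_median:.4f}")
```

Under this model the median should come out noticeably less variable than the mean, even though the mean is the better estimator for the uncontaminated normal component alone.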

Robust [[parametric statistics]] can proceed in two ways:

*by designing estimators so that a pre-selected behaviour of the influence function is achieved

*by replacing estimators that are optimal under the assumption of a normal distribution with estimators that are optimal for, or at least derived for, other distributions: for example using the [[t-distribution]] with low degrees of freedom (high kurtosis; degrees of freedom between 4 and 6 have often been found to be useful in practice {{Citation needed|date=February 2008}}) or with a [[Mixture density|mixture]] of two or more distributions.

Robust estimates have been studied for the following problems:

:estimating [[location parameter]]s

:estimating [[scale parameter]]s

:estimating [[regression coefficient]]s

:estimation of model-states in models expressed in [[State space (controls)|state-space]] form, for which the standard method is equivalent to a [[Kalman filter]].

== Examples ==

* The [[median]] is a robust measure of [[central tendency]], while the [[Arithmetic mean|mean]] is not; for instance, the median has a [[breakdown point]] of 50%, while the mean has a breakdown point of 0% (a single large observation can throw it off).

* The [[median absolute deviation]] and [[interquartile range]] are robust measures of [[statistical dispersion]], while the [[standard deviation]] and [[range (statistics)|range]] are not.
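A small illustration of these two points, using Python's standard `statistics` module on made-up numbers: a single gross error moves the mean and the standard deviation a long way but leaves the median and the (unscaled) median absolute deviation unchanged. The helper `mad` is hypothetical; libraries differ on whether they scale the MAD by 1.4826.

```python
import statistics


def mad(xs):
    """Unscaled median absolute deviation from the median."""
    m = statistics.median(xs)
    return statistics.median([abs(x - m) for x in xs])


clean = [2.0, 3.0, 4.0, 5.0, 6.0]
dirty = [2.0, 3.0, 4.0, 5.0, 1000.0]  # the largest value replaced by a gross error

for name, xs in [("clean", clean), ("dirty", dirty)]:
    print(f"{name}: mean={statistics.mean(xs):.1f} median={statistics.median(xs):.1f} "
          f"sd={statistics.stdev(xs):.1f} mad={mad(xs):.1f}")
```

In both rows the median is 4.0 and the MAD is 1.0, while the mean and standard deviation of the contaminated data are dominated by the single bad value.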

[[Trimmed estimator]]s and [[Winsorising|Winsorised estimators]] are general methods to make statistics more robust. [[L-estimator]]s are a general class of simple statistics, often robust, while [[#M-estimators|M-estimators]] are a general class of robust statistics, and are now the preferred solution, though they can be quite involved to calculate.
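Both constructions can be sketched in a few lines. This is a hedged illustration: the helper names are hypothetical, and trimming or Winsorizing floor(prop * n) points from each end is one common convention among several (implementations differ in how they round).

```python
import math


def trimmed_mean(xs, prop):
    """Sort, drop floor(prop * n) points from each end, and average the rest."""
    if not 0 <= prop < 0.5:
        raise ValueError("prop must be in [0, 0.5)")
    s = sorted(xs)
    k = math.floor(prop * len(s))
    kept = s[k:len(s) - k]
    return sum(kept) / len(kept)


def winsorized_mean(xs, prop):
    """Sort, replace the floor(prop * n) smallest and largest points by the
    nearest retained values, and average all n points."""
    if not 0 <= prop < 0.5:
        raise ValueError("prop must be in [0, 0.5)")
    s = sorted(xs)
    n = len(s)
    k = math.floor(prop * n)
    lo, hi = s[k], s[n - 1 - k]
    return sum(min(max(x, lo), hi) for x in s) / n


data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
print(sum(data) / len(data), trimmed_mean(data, 0.1), winsorized_mean(data, 0.1))  # 14.5 5.5 5.5
```

The trimmed mean discards the extreme points, while the Winsorized mean keeps all n points but pulls the extremes in to the nearest retained values.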

== Definition ==

{{Expand section|date=July 2008}}

There are various definitions of a "robust statistic." Strictly speaking, a '''robust statistic''' is resistant to errors in the results produced by deviations from assumptions<ref name="huber">''Robust Statistics'', [[Peter J. Huber]], Wiley, 1981 (republished in paperback, 2004), page 1.</ref> (e.g., of normality). This means that if the assumptions are only approximately met, the '''robust estimator''' will still have a reasonable [[efficiency (statistics)|efficiency]] and reasonably small [[bias (statistics)|bias]], as well as being [[asymptotically unbiased]], meaning that its bias tends to 0 as the sample size tends to infinity.

One of the most important cases is distributional robustness.<ref name="huber"/> Classical statistical procedures are typically sensitive to "longtailedness" (e.g., when the distribution of the data has longer tails than the assumed normal distribution). Thus, in the context of robust statistics, ''distributionally robust'' and ''outlier-resistant'' are effectively synonymous.<ref name="huber"/> For one perspective on research in robust statistics up to 2000, see Portnoy and He (2000).

A related topic is that of [[resistant statistics]], which are resistant to the effect of extreme scores.

== Example: speed of light data ==

Gelman et al. in ''Bayesian Data Analysis'' (2004) consider a data set relating to speed of light measurements made by [[Simon Newcomb]]. The data sets for that book can be found via the [[Classic data sets]] page, and the book's website contains more information on the data.

Although the bulk of the data look to be more or less normally distributed, there are two obvious outliers. These outliers have a large effect on the mean, dragging it towards them, and away from the center of the bulk of the data. Thus, if the mean is intended as a measure of the location of the center of the data, it is, in a sense, biased when outliers are present.

Also, the distribution of the mean is known to be asymptotically normal due to the central limit theorem. However, outliers can make the distribution of the mean non-normal even for fairly large data sets. Besides this non-normality, the mean is also [[Efficiency (statistics)|inefficient]] in the presence of outliers and less variable measures of location are available.

=== Estimation of location ===

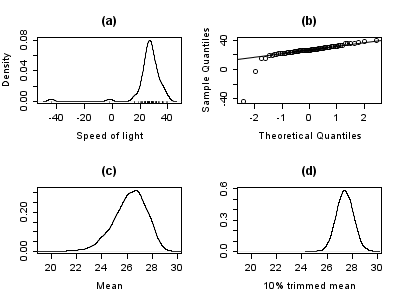

The plot below shows a density plot of the speed of light data, together with a rug plot (panel (a)). Also shown is a normal [[Q–Q plot]] (panel (b)). The outliers are clearly visible in these plots.

Panels (c) and (d) of the plot show the bootstrap distribution of the mean (c) and the 10% [[trimmed mean]] (d). The trimmed mean is a simple robust estimator of location that deletes a certain percentage of observations (10% here) ''from each end'' of the data, then computes the mean in the usual way. The analysis was performed in [[R (programming language)|R]] and 10,000 [[bootstrapping (statistics)|bootstrap]] samples were used for each of the raw and trimmed means.
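The bootstrap comparison can be sketched as follows. Newcomb's measurements are not reproduced here, so the sketch uses a synthetic stand-in of 66 values (64 well-behaved values plus two low outliers); the data, the seed and the number of resamples (2,000 rather than 10,000) are assumptions chosen for illustration, and the sketch reports only the spread of each bootstrap distribution rather than drawing the plots.

```python
import random
import statistics


def trimmed_mean(xs, prop=0.10):
    """Mean after dropping int(prop * n) sorted points from each end."""
    s = sorted(xs)
    k = int(prop * len(s))
    return statistics.fmean(s[k:len(s) - k])


def bootstrap(stat, xs, reps=2000, seed=0):
    """Nonparametric bootstrap: resample xs with replacement and recompute stat."""
    rng = random.Random(seed)
    return [stat(rng.choices(xs, k=len(xs))) for _ in range(reps)]


# Synthetic stand-in (NOT Newcomb's data): 64 values between 20 and 34,
# plus two low outliers, for 66 observations in total.
data = [20 + (i % 15) for i in range(64)] + [-40, -5]

boot_mean = bootstrap(statistics.fmean, data)
boot_trim = bootstrap(trimmed_mean, data)
print("SD of bootstrap means:        ", round(statistics.stdev(boot_mean), 3))
print("SD of bootstrap trimmed means:", round(statistics.stdev(boot_trim), 3))
```

Because each resample can contain zero, one or several copies of the outliers, the bootstrap means spread out much more than the bootstrap trimmed means, which discard those points.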

The distribution of the mean is clearly much wider than that of the 10% trimmed mean (the plots are on the same scale). Also note that whereas the distribution of the trimmed mean appears to be close to normal, the distribution of the raw mean is quite skewed to the left. So, in this sample of 66 observations, just 2 outliers are enough to make the normal approximation provided by the central limit theorem unreliable.

[[Image:speedOfLight.png]]

Robust statistical methods, of which the trimmed mean is a simple example, seek to outperform classical statistical methods in the presence of outliers, or, more generally, when underlying parametric assumptions are not quite correct.

Whilst the trimmed mean performs well relative to the mean in this example, better robust estimates are available. In fact, the mean, median and trimmed mean are all special cases of [[M-estimators]]. Details appear in the sections below.

=== Estimation of scale ===

{{Main| Robust measures of scale}}

The outliers in the speed of light data have more than just an adverse effect on the mean; the usual estimate of scale is the standard deviation, and this quantity is even more badly affected by outliers because the squares of the deviations from the mean go into the calculation, so the outliers' effects are exacerbated.

The plots below show the bootstrap distributions of the standard deviation, [[median absolute deviation]] (MAD) and [[Robust measures of scale#Robust measures of scale based on absolute pairwise differences|Qn estimator]] of scale (Rousseeuw and Croux, 1993). The plots are based on 10,000 bootstrap samples for each estimator, with some Gaussian noise added to the resampled data ([[smoothed bootstrap]]). Panel (a) shows the distribution of the standard deviation, (b) of the MAD and (c) of Qn.

[[Image:speedOfLightScale.png]]

The distribution of standard deviation is erratic and wide, a result of the outliers. The MAD is better behaved, and Qn is a little bit more efficient than MAD. This simple example demonstrates that when outliers are present, the standard deviation cannot be recommended as an estimate of scale.
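The MAD and Qn can be sketched directly from their definitions. Assumptions: the naive O(n²) Qn below uses the asymptotic consistency constant 2.2219 and omits the small-sample correction factor of Rousseeuw and Croux (1993); the MAD is scaled by 1.4826 so that both estimate the standard deviation at the normal model; the function names and data are hypothetical.

```python
import statistics
from itertools import combinations


def mad_scaled(xs):
    """MAD scaled by 1.4826 so that it estimates sigma at the normal model."""
    m = statistics.median(xs)
    return 1.4826 * statistics.median([abs(x - m) for x in xs])


def qn(xs):
    """Naive O(n^2) Qn: 2.2219 times the k-th smallest pairwise distance,
    with h = n // 2 + 1 and k = h * (h - 1) / 2 (no small-sample correction)."""
    n = len(xs)
    h = n // 2 + 1
    k = h * (h - 1) // 2
    dists = sorted(abs(a - b) for a, b in combinations(xs, 2))
    return 2.2219 * dists[k - 1]


clean = [float(v) for v in range(1, 11)]  # 1, 2, ..., 10
dirty = clean[:-1] + [1000.0]             # 10 replaced by a gross error

for name, xs in [("clean", clean), ("dirty", dirty)]:
    print(f"{name}: sd={statistics.stdev(xs):.2f} "
          f"MAD={mad_scaled(xs):.3f} Qn={qn(xs):.3f}")
```

Replacing one value by 1000 inflates the standard deviation by two orders of magnitude, while both robust scale estimates are unchanged on this example. Unlike the MAD, Qn does not measure spread around a central location estimate, which is one reason it is more efficient at the normal model.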

=== Manual screening for outliers ===

Traditionally, statisticians would manually screen data for [[outliers]], and remove them, usually checking the source of the data to see if the outliers were erroneously recorded. Indeed, in the speed of light example above, it is easy to see and remove the two outliers prior to proceeding with any further analysis. However, in modern times, data sets often consist of large numbers of variables being measured on large numbers of experimental units. Therefore, manual screening for outliers is often impractical.

Outliers can often interact in such a way that they mask each other. As a simple example, consider a small univariate data set containing one modest and one large outlier. The estimated standard deviation will be grossly inflated by the large outlier. The result is that the modest outlier looks relatively normal. As soon as the large outlier is removed, the estimated standard deviation shrinks, and the modest outlier now looks unusual.
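The masking effect can be reproduced with a hypothetical data set and a classical z-score rule. The threshold of 2.5 and the data are illustrative assumptions: on the first pass only the large outlier is flagged, and only after it is removed does the modest outlier cross the threshold.

```python
import statistics


def z_flags(xs, threshold=2.5):
    """Return the points whose classical z-score |x - mean| / sd exceeds threshold."""
    m, s = statistics.mean(xs), statistics.stdev(xs)
    return [x for x in xs if abs(x - m) / s > threshold]


data = [10, 11, 9, 10, 12, 11, 10, 9, 11, 10, 16, 100]  # modest (16) and large (100) outliers

first = z_flags(data)
second = z_flags([x for x in data if x not in first])
print("first pass: ", first)    # only the large outlier
print("second pass:", second)   # the modest outlier is now exposed
```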

This problem of masking gets worse as the complexity of the data increases. For example, in regression problems, diagnostic plots are used to identify outliers. However, it is common that once a few outliers have been removed, others become visible. The problem is even worse in higher dimensions.

Robust methods provide automatic ways of detecting, downweighting (or removing), and flagging outliers, largely removing the need for manual screening. Care must be taken; initial data showing the [[ozone hole]] first appearing over Antarctica were rejected as outliers by non-human screening.<ref>''When was the ozone hole discovered'', Weather Underground, http://www.wunderground.com/climate/holefaq.asp</ref>
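One simple automatic rule, sketched here as an illustration rather than as a recommended procedure, replaces the mean and standard deviation in the z-score by the median and the scaled MAD. The cut-off of 3.5 is a commonly quoted rule of thumb and is an assumption here, as are the function name and the data (the same hypothetical data as in the masking discussion above).

```python
import statistics


def robust_flags(xs, threshold=3.5):
    """Return points whose robust z-score |x - median| / (1.4826 * MAD) exceeds threshold."""
    med = statistics.median(xs)
    scale = 1.4826 * statistics.median([abs(x - med) for x in xs])
    if scale == 0:
        raise ValueError("MAD is zero; robust z-scores are undefined")
    return [x for x in xs if abs(x - med) / scale > threshold]


data = [10, 11, 9, 10, 12, 11, 10, 9, 11, 10, 16, 100]
print(robust_flags(data))  # [16, 100]
```

Because the median and MAD are barely moved by the large outlier, both outliers are flagged in a single pass. As the ozone example shows, flagged points still deserve inspection rather than automatic deletion.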

=== Variety of applications ===

Although this article deals with general principles for univariate statistical methods, robust methods also exist for regression problems, generalized linear models, and parameter estimation of various distributions.

== Measures of robustness ==

The basic tools used to describe and measure robustness are the ''breakdown point'', the ''influence function'' and the ''sensitivity curve''.

=== Breakdown point ===

Intuitively, the breakdown point of an [[estimator]] is the proportion of incorrect observations (e.g., arbitrarily large observations) an estimator can handle before giving an incorrect (e.g., arbitrarily large) result. For example, given <math>n</math> independent random variables <math>(X_1,\dots,X_n)\sim\mathcal{N}(0,1)</math> and the corresponding realizations <math>x_1,\dots,x_n</math>, we can use <math>\overline{X_n}:=\frac{X_1+\cdots+X_n}{n}</math> to estimate the mean. Such an estimator has a breakdown point of 0 because we can make <math>\overline{x}</math> arbitrarily large just by changing any one of <math> x_1,\dots,x_n</math>.

The higher the breakdown point of an estimator, the more robust it is. Intuitively, we can understand that a breakdown point cannot exceed 50% because if more than half of the observations are contaminated, it is not possible to distinguish between the underlying distribution and the contaminating distribution. Therefore, the maximum breakdown point is 0.5 and there are estimators which achieve such a breakdown point. For example, the median has a breakdown point of 0.5. The X% trimmed mean has breakdown point of X%, for the chosen level of X. Huber (1981) and Maronna et al. (2006) contain more details. The level and the power breakdown points of tests are investigated in He et al. (1990).
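A crude finite-sample check of these figures replaces more and more observations by a huge value and records when the estimate becomes unbounded. This is only a sketch under stated assumptions: it tries one particular corruption (overwriting the smallest observations with 10<sup>12</sup>) rather than the worst case over all possible replacements used in the formal definition, and the helper names are hypothetical.

```python
import statistics


def trimmed_mean_10(xs):
    """10% trimmed mean: drop int(0.1 * n) sorted points from each end."""
    s = sorted(xs)
    k = int(0.10 * len(s))
    return statistics.fmean(s[k:len(s) - k])


def breakdown_count(estimator, xs, bad=1e12, limit=1e6):
    """Smallest m such that overwriting the first m points of xs with `bad`
    pushes |estimator| above `limit`; None if that never happens."""
    for m in range(1, len(xs) + 1):
        corrupted = [bad] * m + list(xs[m:])
        if abs(estimator(corrupted)) > limit:
            return m
    return None


xs = [float(v) for v in range(1, 12)]  # n = 11
for name, est in [("mean", statistics.fmean),
                  ("median", statistics.median),
                  ("10% trimmed mean", trimmed_mean_10)]:
    print(f"{name}: breaks down once {breakdown_count(est, xs)} of {len(xs)} points are replaced")
```

With n = 11 the mean tolerates no bad points, the 10% trimmed mean tolerates one (about 9%), and the median tolerates five (about 45%). As n grows, these fractions approach the asymptotic values of 0, 10% and 50%.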

<span id="resistant statistic"/>Statistics with high breakdown points are sometimes called '''resistant statistics.'''<ref>[http://secamlocal.ex.ac.uk/people/staff/dbs202/cag/courses/MT37C/course/node13.html Resistant statistics], [http://secamlocal.ex.ac.uk/people/staff/dbs202/ David B. Stephenson]</ref>

==== Example: speed of light data ====

In the speed of light example, removing the two lowest observations causes the mean to change from 26.2 to 27.75, a change of 1.55. The estimate of scale produced by the Qn method is 6.3. We can divide this by the square root of the sample size to get a robust standard error, and we find this quantity to be 0.78. Thus, the change in the mean resulting from removing two [[outliers]] is approximately twice the robust standard error.
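The arithmetic behind these figures, using only the values quoted above (n = 66 observations):

<math>\frac{6.3}{\sqrt{66}} \approx \frac{6.3}{8.12} \approx 0.78, \qquad \frac{27.75-26.2}{0.78} = \frac{1.55}{0.78} \approx 2.0.</math>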

The 10% trimmed mean for the speed of light data is 27.43. Removing the two lowest observations and recomputing gives 27.67. Clearly, the trimmed mean is less affected by the outliers and has a higher breakdown point.

Notice that if we replace the lowest observation, −44, by −1000, the mean becomes 11.73, whereas the 10% trimmed mean is still 27.43. In many areas of applied statistics, it is common for data to be log-transformed to make them nearly symmetric. Very small values become large and negative when log-transformed, and zeros become negatively infinite. Therefore, this example is of practical interest.
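As a check on the first figure: changing one of the 66 observations from −44 to −1000 shifts the sum by −956, so the mean moves by

<math>\frac{-1000-(-44)}{66} = -\frac{956}{66} \approx -14.48,</math>

taking it from about 26.2 to about 11.7, in line with the 11.73 quoted above. The trimmed mean does not move, because the altered value is still the lowest observation and is discarded either way.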

=== Empirical influence function ===

{{Technical|section=Empirical influence function|date=June 2010}}

{{Unreferenced section|date=February 2012}}

[[Image:Biweight.svg|thumb|right|300px|Tukey's biweight function]]

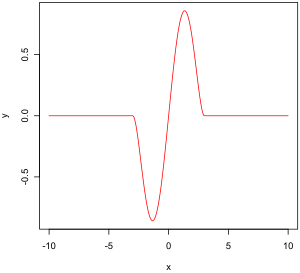

The empirical influence function is a measure of the dependence of the estimator on the value of one of the points in the sample. It is a model-free measure in the sense that it simply relies on calculating the estimator again with a different sample. On the right is Tukey's biweight function, which, as we will later see, is an example of what a "good" (in a sense defined later on) empirical influence function should look like.

In mathematical terms, an influence function is defined as a vector in the space of the estimator, which is in turn defined for a sample which is a subset of the population:

#<math>(\Omega,\mathcal{A},P)</math> is a probability space,

#<math>(\mathcal{X},\Sigma)</math> is a measure space (state space),

#<math>\Theta</math> is a [[parameter space]] of dimension <math>p\in\mathbb{N}^*</math>,

#<math>(\Gamma,S)</math> is a measure space.

For example,

#<math>(\Omega,\mathcal{A},P)</math> is any probability space,

#<math>(\mathcal{X},\Sigma)=(\mathbb{R},\mathcal{B})</math>,

#<math>\Theta=\mathbb{R}\times\mathbb{R}^+</math>,

#<math>(\Gamma,S)=(\mathbb{R},\mathcal{B})</math>.

The definition of an empirical influence function is:

Let <math>n\in\mathbb{N}^*</math> and let <math>X_1,\dots,X_n:(\Omega, \mathcal{A})\rightarrow(\mathcal{X},\Sigma)</math> be [[iid]], with <math>(x_1,\dots,x_n)</math> a sample from these variables. Let <math>T_n:(\mathcal{X}^n,\Sigma^n)\rightarrow(\Gamma,S)</math> be an estimator, and let <math>i\in\{1,\dots,n\}</math>. The empirical influence function <math>EIF_i</math> at observation <math>i</math> is defined by:

<math>EIF_i:x\in\mathcal{X}\mapsto n\left(T_n(x_1,\dots,x_{i-1},x,x_{i+1},\dots,x_n)-T_n(x_1,\dots,x_{i-1},x_i,x_{i+1},\dots,x_n)\right)</math>

Note that <math>EIF_i(x)\in\Gamma</math>.

What this actually means is that we are replacing the ''i''-th value in the sample by an arbitrary value and looking at the output of the estimator. Alternatively, the EIF is defined as the effect on the estimator of adding the point <math>x</math> to the sample, scaled by <math>n+1</math> instead of <math>n</math>.<ref>See Ollila and Koivunen, http://cc.oulu.fi/~esollila/papers/ssp03fin.pdf</ref>
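A direct transcription of the replacement form of the definition for a real-valued estimator, offered as a hedged sketch (the function name `eif` and the sample are hypothetical). Replacing the first observation by larger and larger values makes the EIF of the mean grow without bound, while the EIF of the median stays fixed.

```python
import statistics


def eif(estimator, sample, i, x):
    """Empirical influence n * (T_n(sample with x_i replaced by x) - T_n(sample))."""
    n = len(sample)
    replaced = list(sample)
    replaced[i] = x
    return n * (estimator(replaced) - estimator(sample))


sample = [1.0, 2.0, 3.0, 4.0, 5.0]
for x in [0.0, 10.0, 1000.0]:
    print(f"x={x:7.1f}  EIF(mean)={eif(statistics.fmean, sample, 0, x):8.1f}  "
          f"EIF(median)={eif(statistics.median, sample, 0, x):4.1f}")
```

For the mean the EIF is simply <math>x - x_i</math>, since replacing one value changes the sum by exactly that amount.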

=== Influence function and sensitivity curve ===

Instead of relying solely on the data, we could use the distribution of the random variables. The approach is quite different from that of the previous paragraph. What we are now trying to do is to see what happens to an estimator when we change the distribution of the data slightly: it assumes a ''distribution,'' and measures sensitivity to change in this distribution. By contrast, the empirical influence assumes a ''sample set,'' and measures sensitivity to change in the samples.

Let <math>A</math> be a convex subset of the set of all finite signed measures on <math>\Sigma</math>. We want to estimate the parameter <math>\theta\in\Theta</math> of a distribution <math>F</math> in <math>A</math>. Let the functional <math>T:A\rightarrow\Gamma</math> be the asymptotic value of some estimator sequence <math>(T_n)_{n\in\mathbb{N}}</math>. We will suppose that this functional is [[Fisher consistency|Fisher consistent]], i.e. <math>\forall \theta\in\Theta, T(F_\theta)=\theta</math>. This means that at the model <math>F</math>, the estimator sequence asymptotically measures the correct quantity.

Let <math>G</math> be some distribution in <math>A</math>. What happens when the data doesn't follow the model <math>F</math> exactly but another, slightly different, "going towards" <math>G</math>?

We're looking at: <math>dT_{G-F}(F) = \lim_{t\rightarrow 0^+}\frac{T(tG+(1-t)F) - T(F)}{t}</math>,

which is the [[one-sided limit|one-sided]] [[Gâteaux derivative|directional derivative]] of <math>T</math> at <math>F</math>, in the direction of <math>G-F</math>.

Let <math>x\in\mathcal{X}</math>. <math>\Delta_x</math> is the probability measure which gives mass 1 to <math>\{x\}</math>. We choose <math>G=\Delta_x</math>. The influence function is then defined by:

<math>IF(x; T; F):=\lim_{t\rightarrow 0^+}\frac{T(t\Delta_x+(1-t)F) - T(F)}{t}.</math>

It describes the effect of an infinitesimal contamination at the point <math>x</math> on the estimate we are seeking, standardized by the mass <math>t</math> of the contamination (the asymptotic bias caused by contamination in the observations). For a robust estimator, we want a bounded influence function, that is, one which does not go to infinity as <math>|x|</math> becomes arbitrarily large.
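Two standard examples make the definition concrete. For the mean functional <math>T(F)=\int y\,dF(y)=\mu</math>, the contaminated distribution <math>t\Delta_x+(1-t)F</math> has mean <math>tx+(1-t)\mu</math>, so

<math>IF(x;T;F) = \lim_{t\rightarrow 0^+}\frac{tx+(1-t)\mu-\mu}{t} = x-\mu,</math>

which is unbounded in <math>x</math>. For the median <math>m</math> of a distribution <math>F</math> with density <math>f</math> positive at <math>m</math>, a similar calculation gives

<math>IF(x;T;F) = \frac{\operatorname{sign}(x-m)}{2f(m)},</math>

which is bounded.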

=== Desirable properties ===

Properties of an influence function that give it desirable performance are:

#Finite rejection point <math>\rho^*</math>,

#Small gross-error sensitivity <math>\gamma^*</math>,

#Small local-shift sensitivity <math>\lambda^*</math>.

==== Rejection point ====

<math>\rho^*:=\inf_{r>0}\{r:IF(x;T;F)=0, |x|>r\}</math>

==== Gross-error sensitivity ====

<math>\gamma^*(T;F) := \sup_{x\in\mathcal{X}}|IF(x; T ; F)|</math>

==== Local-shift sensitivity ====

<math>\lambda^*(T;F) := \sup_{(x,y)\in\mathcal{X}^2\atop x\neq y}\left\|\frac{IF(y ; T; F) - IF(x; T ; F)}{y-x}\right\|</math>

This value, which looks a lot like a [[Lipschitz constant]], represents the effect of shifting an observation slightly from <math>x</math> to a neighbouring point <math>y</math>, i.e., adding an observation at <math>y</math> and removing one at <math>x</math>.
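The three quantities can be approximated numerically on a grid. The influence function of an M-estimator is proportional to its <math>\psi</math> function (see the section on M-estimators), so the sketch below evaluates the quantities for <math>\psi</math> itself. The results are therefore correct only up to the scaling factor <math>M^{-1}</math>. The tuning constants (1.345 for Huber, 4.685 for the biweight), the grid on [−10, 10] and the function names are assumptions made for illustration.

```python
import math


def huber_psi(x, k=1.345):
    """Huber psi: identity on [-k, k], clipped to -k or k outside."""
    return max(-k, min(k, x))


def biweight_psi(x, c=4.685):
    """Tukey biweight psi: x * (1 - (x / c)**2)**2 for |x| <= c, zero beyond."""
    return x * (1.0 - (x / c) ** 2) ** 2 if abs(x) <= c else 0.0


GRID = [i / 1000 for i in range(-10000, 10001)]  # x in [-10, 10], step 0.001


def rejection_point(f, grid=GRID):
    """Largest |x| on the grid where f(x) != 0; inf if f is still nonzero at the edge."""
    r = max((abs(x) for x in grid if f(x) != 0.0), default=0.0)
    return math.inf if r >= max(abs(x) for x in grid) else r


def gross_error_sensitivity(f, grid=GRID):
    """sup |f(x)| over the grid."""
    return max(abs(f(x)) for x in grid)


def local_shift_sensitivity(f, grid=GRID):
    """Largest difference quotient between neighbouring grid points."""
    return max(abs(f(b) - f(a)) / (b - a) for a, b in zip(grid, grid[1:]))


for name, f in [("Huber", huber_psi), ("biweight", biweight_psi)]:
    print(f"{name}: rho*={rejection_point(f)}, gamma*={gross_error_sensitivity(f):.4f}, "
          f"lambda*={local_shift_sensitivity(f):.4f}")
```

Huber's <math>\psi</math> is bounded but never returns to zero, so its rejection point is infinite. The biweight rejects points beyond <math>c</math> entirely. Both have a local-shift sensitivity of about 1, set by their slope near the origin.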

== M-estimators ==

{{Main|M-estimator}}

''(The mathematical context of this paragraph is given in the section on empirical influence functions.)''

Historically, several approaches to robust estimation were proposed, including R-estimators and [[L-estimator]]s. However, M-estimators now appear to dominate the field as a result of their generality, high breakdown point and efficiency. See Huber (1981).

M-estimators are a generalization of [[maximum likelihood estimator]]s (MLEs). An MLE maximizes <math>\prod_{i=1}^n f(x_i)</math> or, equivalently, minimizes <math>\sum_{i=1}^n-\log f(x_i)</math>. In 1964, Huber proposed to generalize this to the minimization of <math>\sum_{i=1}^n \rho(x_i)</math>, where <math>\rho</math> is some function. MLEs are therefore a special case of M-estimators (hence the name: "''M''aximum likelihood type" estimators).

Minimizing <math>\sum_{i=1}^n \rho(x_i)</math> can often be done by differentiating <math>\rho</math> and solving <math>\sum_{i=1}^n \psi(x_i) = 0</math>, where <math>\psi(x) = \frac{d\rho(x)}{dx}</math> (if <math>\rho</math> has a derivative).
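For the location problem, with <math>\rho</math> applied to scaled residuals <math>(x_i-\mu)/s</math>, the equation <math>\sum_{i=1}^n \psi(x_i)=0</math> is commonly solved by iteratively reweighted averaging. The sketch below assumes Huber's <math>\psi</math> with <math>k=1.345</math>. It fixes <math>s</math> at the normalized MAD rather than estimating it jointly, and its function name and defaults are hypothetical.

```python
import statistics


def huber_location(xs, k=1.345, tol=1e-10, max_iter=100):
    """Huber M-estimate of location with the scale fixed at the normalized MAD.

    Solves sum(psi((x_i - mu) / s)) = 0 by iteratively reweighted means,
    using weights w_i = psi(r_i) / r_i = min(1, k / |r_i|).
    """
    mu = statistics.median(xs)
    s = 1.4826 * statistics.median([abs(x - mu) for x in xs])
    if s == 0:
        return mu
    for _ in range(max_iter):
        # Points within k * s of mu get full weight; farther points are downweighted.
        w = [1.0 if abs(x - mu) <= k * s else k * s / abs(x - mu) for x in xs]
        new_mu = sum(wi * x for wi, x in zip(w, xs)) / sum(w)
        if abs(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu


data = [1.0, 2.0, 3.0, 4.0, 100.0]
print(statistics.fmean(data), huber_location(data))
```

At a fixed point, <math>\sum_i w_i (x_i-\mu)=0</math>, which is exactly <math>\sum_i \psi((x_i-\mu)/s)=0</math> because <math>w_i r_i=\psi(r_i)</math>.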

Several choices of <math>\rho</math> and <math>\psi</math> have been proposed. The two figures below show four <math>\rho</math> functions and their corresponding <math>\psi</math> functions.

[[Image:RhoFunctions.png]]

For squared errors, <math>\rho(x)</math> increases at an accelerating rate, whilst for absolute errors, it increases at a constant rate. When Winsorizing is used, a mixture of these two effects is introduced: for small values of x, <math>\rho</math> increases at the squared rate, but once the chosen threshold is reached (1.5 in this example), the rate of increase becomes constant. This Winsorised <math>\rho</math> function is also known as the [[Huber loss function]].

Tukey's biweight (also known as bisquare) function behaves in a similar way to the squared error function at first, but for larger errors, the function tapers off.
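The four <math>\rho</math> functions can be written down as follows. Normalizations vary between texts. The ones below are assumptions: <math>x^2</math> for squared error, <math>x^2/2</math> inside the Huber threshold <math>k=1.5</math>, and the <math>c^2/6</math> scaling of the biweight with <math>c=4.685</math>. They are chosen so that <math>\rho'</math> gives the usual clipped-identity Huber <math>\psi</math> and the biweight <math>\psi(x)=x(1-(x/c)^2)^2</math> for <math>|x|\le c</math>.

```python
def rho_squared(x):
    """Squared error: grows at an accelerating rate."""
    return x * x


def rho_absolute(x):
    """Absolute error: grows at a constant rate."""
    return abs(x)


def rho_huber(x, k=1.5):
    """Huber: quadratic for |x| <= k, linear beyond (continuous at |x| = k)."""
    return 0.5 * x * x if abs(x) <= k else k * abs(x) - 0.5 * k * k


def rho_biweight(x, c=4.685):
    """Tukey biweight: c^2/6 * (1 - (1 - (x/c)^2)^3) for |x| <= c, constant beyond."""
    if abs(x) <= c:
        return c * c / 6.0 * (1.0 - (1.0 - (x / c) ** 2) ** 3)
    return c * c / 6.0


for x in (0.5, 1.5, 3.0, 6.0):
    print(x, rho_squared(x), rho_absolute(x), rho_huber(x), round(rho_biweight(x), 3))
```

Because the biweight <math>\rho</math> is constant beyond <math>c</math>, an arbitrarily large error adds only a fixed amount to the objective. This is the "tapering off" described above.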

[[Image:PsiFunctions.png]]

=== Properties of M-estimators ===

Notice that M-estimators do not necessarily relate to a probability density function. Therefore, off-the-shelf approaches to inference that arise from likelihood theory cannot, in general, be used.

It can be shown that M-estimators are asymptotically normally distributed, so that as long as their standard errors can be computed, an approximate approach to inference is available.

Since M-estimators are normal only asymptotically, for small sample sizes it might be appropriate to use an alternative approach to inference, such as the bootstrap. However, M-estimates are not necessarily unique (i.e., there might be more than one solution that satisfies the equations). Also, it is possible that any particular bootstrap sample can contain more outliers than the estimator's breakdown point. Therefore, some care is needed when designing bootstrap schemes.

Of course, as we saw with the speed of light example, the mean is only normally distributed asymptotically and when outliers are present the approximation can be very poor even for quite large samples. However, the type I error rates of classical statistical tests, including those based on the mean, are typically bounded above by the nominal size of the test. The same is not true of M-estimators, and the type I error rate can be substantially above the nominal level.

These considerations do not "invalidate" M-estimation in any way. They merely make clear that some care is needed in their use, as is true of any other method of estimation.

=== Influence function of an M-estimator ===

It can be shown that the influence function of an M-estimator <math>T</math> is proportional to <math>\psi</math> (see Huber, 1981 (and 2004), page 45), which means we can derive the properties of such an estimator (such as its rejection point, gross-error sensitivity or local-shift sensitivity) when we know its <math>\psi</math> function.

<math>IF(x;T;F) = M^{-1}\psi(x,T(F))</math>

with the <math>p\times p</math> matrix <math>M</math> given by:

<math>M = -\int_{\mathcal{X}}\left(\frac{\partial \psi(x,\theta)}{\partial \theta}\right)_{T(F)}dF(x)</math>.
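For a one-dimensional location model with <math>\psi(x,\theta)=\psi(x-\theta)</math>, <math>M</math> reduces to <math>E_F[\psi'(X-T(F))]</math>. The asymptotic variance of the estimator is then <math>\int IF^2\,dF = E_F[\psi^2]/M^2</math>. The sketch below evaluates this in closed form for Huber's <math>\psi</math> at the standard normal, which is an assumed model with the scale treated as known. It reports efficiency relative to the sample mean, whose asymptotic variance there is 1. The function names are hypothetical.

```python
import math


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def huber_efficiency(k):
    """Asymptotic efficiency, relative to the mean, of the Huber location
    M-estimator at N(0, 1) with known scale.

    M        = E[psi'(Z)] = 2 Phi(k) - 1
    E[psi^2] = (2 Phi(k) - 1) - 2 k phi(k) + 2 k^2 (1 - Phi(k))
    asymptotic variance = E[psi^2] / M^2, efficiency = 1 / variance.
    """
    m = 2.0 * normal_cdf(k) - 1.0
    e_psi2 = m - 2.0 * k * normal_pdf(k) + 2.0 * k * k * (1.0 - normal_cdf(k))
    return m * m / e_psi2


for k in (0.5, 1.0, 1.345, 2.0):
    print(k, round(huber_efficiency(k), 3))
```

Under these assumptions the common choice <math>k=1.345</math> gives roughly 95% efficiency at the normal model. Smaller <math>k</math> trades efficiency for a smaller gross-error sensitivity.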

=== Choice of <math>\psi</math> and <math>\rho</math> ===

In many practical situations, the choice of the <math>\psi</math> function is not critical to gaining a good robust estimate, and many choices will give similar results that offer great improvements, in terms of efficiency and bias, over classical estimates in the presence of outliers (Huber, 1981).

Theoretically, redescending <math>\psi</math> functions (those that return to zero for large <math>|x|</math>) are to be preferred, and Tukey's biweight (also known as bisquare) function is a popular choice. Maronna et al. (2006) recommend the biweight function with efficiency at the normal set to 85%.
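An efficiency target translates into a tuning constant <math>c</math> for the biweight. The sketch below assumes known scale and the standard normal model. It computes the asymptotic efficiency <math>E[\psi']^2/E[\psi^2]</math> by Simpson's rule and solves for <math>c</math> by bisection. The helper names, integration settings and bracketing interval are assumptions.

```python
import math


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def simpson(f, a, b, n=2000):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def biweight_efficiency(c):
    """Asymptotic efficiency at N(0, 1) of the biweight location M-estimator (known scale)."""
    psi = lambda z: z * (1.0 - (z / c) ** 2) ** 2
    dpsi = lambda z: (1.0 - (z / c) ** 2) * (1.0 - 5.0 * (z / c) ** 2)
    m = simpson(lambda z: dpsi(z) * normal_pdf(z), -c, c)
    e_psi2 = simpson(lambda z: psi(z) ** 2 * normal_pdf(z), -c, c)
    return m * m / e_psi2


def tuning_constant(target, lo=1.0, hi=10.0, iters=40):
    """Bisection for the c giving a target efficiency (efficiency increases with c)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if biweight_efficiency(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


print(round(biweight_efficiency(4.685), 3))
print(round(tuning_constant(0.85), 2))
```

Under these assumptions the familiar constant <math>c=4.685</math> corresponds to roughly 95% efficiency. An 85% target gives a noticeably smaller <math>c</math>, that is, a biweight that starts rejecting outlying points sooner.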

== Robust parametric approaches ==

M-estimators do not necessarily relate to a density function and so are not fully parametric. Fully parametric approaches to robust modeling and inference, both Bayesian and likelihood approaches, usually deal with heavy-tailed distributions such as Student's ''t''-distribution.

For the ''t''-distribution with <math>\nu</math> degrees of freedom, it can be shown that

<math>\psi(x) = \frac{x}{x^2 + \nu}</math> (up to a constant factor of <math>\nu+1</math>, which does not affect the solution of <math>\sum_{i=1}^n \psi(x_i) = 0</math>).

For <math>\nu=1</math>, the ''t''-distribution is equivalent to the Cauchy distribution. Notice that the degrees of freedom is sometimes known as the ''kurtosis parameter''. It is the parameter that controls how heavy the tails are. In principle, <math>\nu</math> can be estimated from the data in the same way as any other parameter. In practice, it is common for there to be multiple local maxima when <math>\nu</math> is allowed to vary. As such, it is common to fix <math>\nu</math> at a value around 4 or 6. The figure below displays the <math>\psi</math>-function for 4 different values of <math>\nu</math>.
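With <math>\nu</math> fixed, the location and scale of the ''t'' model can be fitted by the EM algorithm. This amounts to iteratively reweighted averaging with weights <math>(\nu+1)/(\nu+r^2)</math> on the standardized residuals <math>r</math>. At convergence, the location satisfies <math>\sum_i \psi=0</math> for the <math>\psi</math> above. This is a sketch under stated assumptions: univariate data, <math>\nu=4</math> fixed, median and MAD starting values, and a hypothetical function name. It does not estimate <math>\nu</math>.

```python
import statistics


def t_location_scale(xs, nu=4.0, tol=1e-10, max_iter=500):
    """ML location and scale for a Student-t model with fixed nu, via EM.

    Each step reweights observations by w_i = (nu + 1) / (nu + r_i^2), where
    r_i = (x_i - mu) / sigma, so points far from mu get small weights.
    """
    mu = statistics.median(xs)
    sigma = 1.4826 * statistics.median([abs(x - mu) for x in xs]) or 1.0
    n = len(xs)
    for _ in range(max_iter):
        w = [(nu + 1.0) / (nu + ((x - mu) / sigma) ** 2) for x in xs]
        new_mu = sum(wi * x for wi, x in zip(w, xs)) / sum(w)
        new_sigma = (sum(wi * (x - new_mu) ** 2 for wi, x in zip(w, xs)) / n) ** 0.5
        if abs(new_mu - mu) < tol and abs(new_sigma - sigma) < tol:
            return new_mu, new_sigma
        mu, sigma = new_mu, new_sigma
    return mu, sigma


data = [1.0, 2.0, 3.0, 4.0, 5.0, 100.0]
mu_hat, sigma_hat = t_location_scale(data)
print(statistics.fmean(data), mu_hat, sigma_hat)
```

The outlying value receives a weight of well under 1% of that of the bulk, so the fitted location stays near the center of the remaining points. The sample mean, by contrast, is pulled far towards the outlier.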

[[Image:TDistPsi.png]]

=== Example: speed of light data ===

For the speed of light data, allowing the kurtosis parameter to vary and maximizing the likelihood, we get

<math> \hat\mu = 27.40, \hat\sigma = 3.81, \hat\nu = 2.13.</math>

Fixing <math>\nu = 4</math> and maximizing the likelihood gives

<math>\hat\mu = 27.49, \hat\sigma = 4.51.</math>

== Related concepts ==

A [[pivotal quantity]] is a function of data, whose underlying population distribution is a member of a parametric family, that is not dependent on the values of the parameters. An [[ancillary statistic]] is such a function that is also a statistic, meaning that it is computed in terms of the data alone. Such functions are robust to parameters in the sense that they are independent of the values of the parameters, but not robust to the model in the sense that they assume an underlying model (parametric family), and in fact such functions are often very sensitive to violations of the model assumptions. Thus [[test statistic]]s, frequently constructed in terms of these so as not to be sensitive to assumptions about parameters, are still very sensitive to model assumptions.

== Replacing outliers and missing values ==

If there are relatively few missing points, there are some models which can be used to estimate values to complete the series, such as replacing missing values with the mean or median of the data. Simple linear regression can also be used to estimate missing values (MacDonald and Zucchini, 1997; Harvey, 1989). In addition, [[outliers]] can sometimes be accommodated in the data through the use of trimmed means, scale estimators other than the standard deviation (e.g., MAD) and Winsorization (McBean and Rovers, 1998). In calculations of a trimmed mean, a fixed percentage of data is dropped from each end of the ordered data, thus eliminating the outliers. The mean is then calculated using the remaining data. [[Winsorizing]] involves accommodating an outlier by replacing it with the nearest non-outlying value (the next smallest value for a high outlier, the next largest for a low one) (Rustum & Adeloye, 2007).<ref>Rustum R., and A. J. Adeloye (2007); ''Replacing outliers and missing values from activated sludge data using Kohonen Self Organizing Map'', Journal of Environmental Engineering, 133 (9), 909-916.</ref>

However, using these types of models to predict missing values or outliers in a long time series is difficult and often unreliable, particularly if the number of values to be in-filled is relatively high in comparison with total record length. The accuracy of the estimate depends on how good and representative the model is and how long the period of missing values extends (Rosen and Lennox, 2001). In the case of a dynamic process, any variable may depend not only on its own historical time series but also on several other variables or parameters of the process. In other words, the problem is an exercise in multivariate analysis rather than the univariate approach of most of the traditional methods of estimating missing values and outliers; a multivariate model will therefore be more representative than a univariate one for predicting missing values. The Kohonen self-organizing map (KSOM) offers a simple and robust multivariate model for data analysis, thus providing good possibilities to estimate missing values, taking into account their relationship or correlation with other pertinent variables in the data record (Rustum & Adeloye 2007).

Standard [[Kalman filter]]s are not robust to outliers. To address this, Ting, Theodorou and Schaal have recently shown that a modification of Masreliez's theorem can deal with outliers.<ref>Jo-anne Ting, Evangelos Theodorou and Stefan Schaal; "''A Kalman filter for robust outlier detection''", International Conference on Intelligent Robots and Systems - IROS , pp. 1514-1519 (2007).</ref>

One common approach to handle outliers in data analysis is to perform outlier detection first, followed by an efficient estimation method (e.g., least squares). While this approach is often useful, one must keep in mind two challenges. First, an outlier deletion method that relies on a non-robust initial fit can suffer from the effect of masking, that is, a group of outliers can mask each other and escape detection (Rousseeuw and Leroy, 2007). Second, if a high breakdown initial fit is used for outlier detection, the follow-up analysis might inherit some of the inefficiencies of the initial estimator (He and Portnoy, 1992).

==See also==

*[[Robust confidence intervals]]

*[[Robust regression]]

*[[Unit-weighted regression]]

==References==

{{Reflist}}

* ''Robust Statistics - The Approach Based on Influence Functions'', Frank R. Hampel, Elvezio M. Ronchetti, [[Peter J. Rousseeuw]] and Werner A. Stahel, Wiley, 1986 (republished in paperback, 2005)

* ''Robust Statistics'', Peter J. Huber, Wiley, 1981 (republished in paperback, 2004)

* ''Robust Regression and Outlier Detection'', [[Peter J. Rousseeuw]] and Annick M. Leroy, Wiley, 1987 (republished in paperback, 2003)

* {{Cite book|last1=Hettmansperger|first1=T. P.|last2=McKean|first2=J. W.|title=Robust nonparametric statistical methods| edition=First|series=Kendall's Library of Statistics|volume=5|publisher=John Wiley & Sons, Inc.|location=New York|year=1998|pages=xiv+467 pp.|isbn=0-340-54937-8 }} {{MR|1604954}}

* ''Robust Statistics - Theory and Methods'', Ricardo Maronna, [[R. Douglas Martin]] and Victor Yohai, Wiley, 2006

<!-- NOT ROBUST * ''Bayesian Data Analysis'', Andrew Gelman, John B. Carlin, Hal S. Stern and Donald B. Rubin, Chapman & Hall/CRC, 2004 -->

* [[Peter J. Rousseeuw|Rousseeuw, P.J.]] and Croux, C. "Alternatives to the Median Absolute Deviation," ''Journal of the American Statistical Association'' 88 (1993), 1273

*{{Citation | last1=Press | first1=WH | last2=Teukolsky | first2=SA | last3=Vetterling | first3=WT | last4=Flannery | first4=BP | year=2007 | title=Numerical Recipes: The Art of Scientific Computing | edition=3rd | publisher=Cambridge University Press | publication-place=New York | isbn=978-0-521-88068-8 | chapter=Section 15.7. Robust Estimation | chapter-url=http://apps.nrbook.com/empanel/index.html#pg=818}}

* He, X. and Portnoy, S. "Reweighted LS Estimators Converge at the same Rate as the Initial Estimator," ''Annals of Statistics'' Vol. 20, No. 4 (1992), 2161–2167

* He, X., Simpson, D.G. and Portnoy, S. "Breakdown Robustness of Tests," ''Journal of the American Statistical Association'' Vol. 85, No. 40, (1990), 446-452 | |||

* Portnoy S. and He, X. "A Robust Journey in the New Millennium," ''Journal of the American Statistical Association'' Vol. 95, No. 452 (Dec., 2000), 1331–1335 | |||

* Stephen M. Stigler. "The Changing History of Robustness," ''The American Statistician'' November 1, 2010, 64(4): 277-281. {{doi|10.1198/tast.2010.10159}} | |||

* Wilcox, R. "Introduction to Robust Estimation & Hypothesis Testing," Academic Press, 201 | |||

== External links ==

*[[Brian D. Ripley|Brian Ripley's]] [http://www.stats.ox.ac.uk/pub/StatMeth/Robust.pdf robust statistics course notes.]

*[http://www.nickfieller.staff.shef.ac.uk/sheff-only/StatModall05.pdf Nick Fieller's course notes on Statistical Modelling and Computation] contain material on robust regression.

*[http://lagrange.math.siu.edu/Olive/ol-bookp.htm David Olive's site] contains course notes on robust statistics and some data sets.

*[http://jsxgraph.uni-bayreuth.de/wiki/index.php/Analyze_data_with_the_Statistics_software_R Online experiments using R and JSXGraph]

{{DEFAULTSORT:Robust Statistics}}

[[Category:Statistical theory]]

[[Category:Robust statistics| ]]

[[ru:Робастность в статистике]]

Revision as of 18:44, 2 October 2013

Robust statistics are statistics with good performance for data drawn from a wide range of probability distributions, especially for distributions that are not normally distributed. Robust statistical methods have been developed for many common problems, such as estimating location, scale and regression parameters. One motivation is to produce statistical methods that are not unduly affected by outliers. Another motivation is to provide methods with good performance when there are small departures from parametric distributions. For example, robust methods work well for mixtures of two normal distributions with different standard deviations (say, one and three); under this model, non-robust methods like a t-test work poorly.

Introduction

Robust statistics seeks to provide methods that emulate popular statistical methods, but which are not unduly affected by outliers or other small departures from model assumptions. In statistics, classical estimation methods rely heavily on assumptions which are often not met in practice. In particular, it is often assumed that the data errors are normally distributed, at least approximately, or that the central limit theorem can be relied on to produce normally distributed estimates. Unfortunately, when there are outliers in the data, classical estimators often have very poor performance, when judged using the breakdown point and the influence function, described below.

The practical effect of problems seen in the influence function can be studied empirically by examining the sampling distribution of proposed estimators under a mixture model, where one mixes in a small amount (1–5% is often sufficient) of contamination. For instance, one may use a mixture of 95% from a normal distribution and 5% from a normal distribution with the same mean but a significantly higher standard deviation (representing outliers).
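The mixture model above is easy to simulate. The following is a minimal Python sketch using only the standard library; the sample size (50), contamination rate (5%), outlier standard deviation (10), number of replications and seed are illustrative assumptions rather than values from the text. It estimates the sampling variance of the mean and of the median across repeated contaminated samples.

```python
import random
import statistics


def sampling_variances(n_reps=2000, n=50, eps=0.05, outlier_sd=10.0, seed=1):
    """Monte Carlo variance of the sample mean and median under a contaminated normal.

    Each observation is drawn from N(0, 1) with probability 1 - eps and from
    N(0, outlier_sd**2) with probability eps (same mean, larger spread).
    """
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(n_reps):
        sample = [
            rng.gauss(0.0, outlier_sd if rng.random() < eps else 1.0)
            for _ in range(n)
        ]
        means.append(statistics.fmean(sample))
        medians.append(statistics.median(sample))
    return statistics.variance(means), statistics.variance(medians)


var_mean, var_median = sampling_variances()
print(f"variance of mean:   {var_mean:.4f}")
print(f"variance of median: {var_median:.4f}")
```

With these settings the median's sampling variance should come out well below the mean's; setting `eps=0.0` (pure normal data) reverses the ordering, reflecting the mean's higher efficiency when the normal model holds exactly.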

Robust parametric statistics can proceed in two ways:

- by designing estimators so that a pre-selected behaviour of the influence function is achieved

- by replacing estimators that are optimal under the assumption of a normal distribution with estimators that are optimal for, or at least derived for, other distributions: for example using the t-distribution with low degrees of freedom (high kurtosis; degrees of freedom between 4 and 6 have often been found to be useful in practice) or with a mixture of two or more distributions.

Robust estimates have been studied for the following problems:

- estimating location parameters

- estimating scale parameters

- estimating regression coefficients

- estimation of model-states in models expressed in state-space form, for which the standard method is equivalent to a Kalman filter.

Examples

- The median is a robust measure of central tendency, while the mean is not; for instance, the median has a breakdown point of 50%, while the mean has a breakdown point of 0% (a single large observation can throw it off).

- The median absolute deviation and interquartile range are robust measures of statistical dispersion, while the standard deviation and range are not.
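A small illustration of these two claims, using Python's standard `statistics` module on made-up data: a single gross error moves the mean, standard deviation and range dramatically, while the median, MAD and interquartile range barely change. The MAD here is unscaled (multiplying by about 1.4826 makes it consistent for the standard deviation at the normal), and the quartile convention (`method="inclusive"`) is one choice among several.

```python
import statistics


def mad(xs):
    """Median absolute deviation about the median (unscaled)."""
    med = statistics.median(xs)
    return statistics.median([abs(x - med) for x in xs])


def iqr(xs):
    """Interquartile range, using the 'inclusive' quartile convention."""
    q1, _, q3 = statistics.quantiles(xs, n=4, method="inclusive")
    return q3 - q1


clean = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.7, 10.3, 10.1]
dirty = clean[:-1] + [1000.0]  # last observation replaced by a gross error

for name, data in [("clean", clean), ("dirty", dirty)]:
    print(f"{name}: mean={statistics.fmean(data):.2f} "
          f"median={statistics.median(data):.2f} "
          f"sd={statistics.stdev(data):.2f} range={max(data) - min(data):.2f} "
          f"MAD={mad(data):.2f} IQR={iqr(data):.2f}")
```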

Trimmed estimators and Winsorised estimators are general methods to make statistics more robust. L-estimators are a general class of simple statistics, often robust, while M-estimators are a general class of robust statistics, and are now the preferred solution, though they can be quite involved to calculate.
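A sketch of the trimmed and Winsorised means in Python, assuming the convention (also used by R's `mean(x, trim = ...)`) that ⌊prop·n⌋ observations are affected at each end; other rounding conventions exist, and the function names are hypothetical.

```python
import math


def trimmed_mean(xs, prop):
    """Drop floor(prop * n) observations from each end, then average the rest."""
    xs = sorted(xs)
    g = math.floor(prop * len(xs))
    kept = xs[g:len(xs) - g]
    return sum(kept) / len(kept)


def winsorized_mean(xs, prop):
    """Clamp the floor(prop * n) smallest and largest values to the nearest
    retained value, then average all n values."""
    xs = sorted(xs)
    n = len(xs)
    g = math.floor(prop * n)
    lo, hi = xs[g], xs[n - 1 - g]
    return sum(min(max(x, lo), hi) for x in xs) / n


data = [2, 3, 4, 5, 6, 7, 8, 9, 12, 100]
print(sum(data) / len(data))        # ordinary mean: 15.6
print(trimmed_mean(data, 0.1))      # 6.75
print(winsorized_mean(data, 0.1))   # 6.9
```

Trimming discards the extreme values, while Winsorising keeps the sample size and pulls them in to the nearest retained value; both leave the single gross error with little influence.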

Definition

There are various definitions of a "robust statistic." Strictly speaking, a robust statistic is resistant to errors in the results, produced by deviations from assumptions[1] (e.g., of normality). This means that if the assumptions are only approximately met, the robust estimator will still have a reasonable efficiency, and reasonably small bias, as well as being asymptotically unbiased, meaning having a bias tending towards 0 as the sample size tends towards infinity.

One of the most important cases is distributional robustness.[1] Classical statistical procedures are typically sensitive to "longtailedness" (e.g., when the distribution of the data has longer tails than the assumed normal distribution). Thus, in the context of robust statistics, distributionally robust and outlier-resistant are effectively synonymous.[1] For one perspective on research in robust statistics up to 2000, see Portnoy and He (2000).

A related topic is that of resistant statistics, which are resistant to the effect of extreme scores.

Example: speed of light data

Gelman et al. in Bayesian Data Analysis (2004) consider a data set relating to speed of light measurements made by Simon Newcomb. The data sets for that book can be found via the Classic data sets page, and the book's website contains more information on the data.

Although the bulk of the data look to be more or less normally distributed, there are two obvious outliers. These outliers have a large effect on the mean, dragging it towards them, and away from the center of the bulk of the data. Thus, if the mean is intended as a measure of the location of the center of the data, it is, in a sense, biased when outliers are present.

Also, the distribution of the mean is known to be asymptotically normal due to the central limit theorem. However, outliers can make the distribution of the mean non-normal even for fairly large data sets. Besides this non-normality, the mean is also inefficient in the presence of outliers and less variable measures of location are available.

Estimation of location

The plot below shows a density plot of the speed of light data, together with a rug plot (panel (a)). Also shown is a normal Q–Q plot (panel (b)). The outliers are clearly visible in these plots.

Panels (c) and (d) of the plot show the bootstrap distribution of the mean (c) and the 10% trimmed mean (d). The trimmed mean is a simple robust estimator of location that deletes a certain percentage of observations (10% here) from each end of the data, then computes the mean in the usual way. The analysis was performed in R and 10,000 bootstrap samples were used for each of the raw and trimmed means.

The distribution of the mean is clearly much wider than that of the 10% trimmed mean (the plots are on the same scale). Also note that whereas the distribution of the trimmed mean appears to be close to normal, the distribution of the raw mean is quite skewed to the left. So, in this sample of 66 observations, only 2 outliers cause the central limit theorem to be inapplicable.
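The bootstrap comparison can be sketched in Python as follows. The 66 values are assumed to be Newcomb's measurements in the coded units distributed with Gelman et al.'s data sets; they reproduce the summary figures quoted in the breakdown-point example (mean about 26.2, 10% trimmed mean 27.43). The seed and helper names are illustrative, and the same seed is reused so both statistics are computed on identical resamples.

```python
import math
import random
import statistics

# Newcomb's 66 speed-of-light measurements (coded units; assumed data set).
NEWCOMB = [28, 26, 33, 24, 34, -44, 27, 16, 40, -2, 29, 22, 24, 21, 25, 30, 23,
           29, 31, 19, 24, 20, 36, 32, 36, 28, 25, 21, 28, 29, 37, 25, 28, 26,
           30, 32, 36, 26, 30, 22, 36, 23, 27, 27, 28, 27, 31, 27, 26, 33, 26,
           32, 32, 24, 39, 28, 24, 25, 32, 25, 29, 27, 28, 29, 16, 23]


def trimmed_mean(xs, prop=0.1):
    """Mean after dropping floor(prop * n) observations from each end."""
    xs = sorted(xs)
    g = math.floor(prop * len(xs))
    kept = xs[g:len(xs) - g]
    return sum(kept) / len(kept)


def bootstrap(stat, data, n_boot=10_000, seed=0):
    """Nonparametric bootstrap: recompute stat on resamples drawn with replacement."""
    rng = random.Random(seed)
    n = len(data)
    return [stat(rng.choices(data, k=n)) for _ in range(n_boot)]


def skewness(xs):
    """Sample skewness (third standardised moment, no bias correction)."""
    m = statistics.fmean(xs)
    s = statistics.pstdev(xs)
    return statistics.fmean([((x - m) / s) ** 3 for x in xs])


boot_mean = bootstrap(statistics.fmean, NEWCOMB)
boot_trim = bootstrap(trimmed_mean, NEWCOMB)
for name, draws in [("mean", boot_mean), ("10% trimmed mean", boot_trim)]:
    print(f"{name}: bootstrap SD {statistics.stdev(draws):.3f}, "
          f"skewness {skewness(draws):.2f}")
```

Under these assumptions the bootstrap standard deviation of the raw mean should come out clearly larger than that of the trimmed mean, with negative skewness, in line with the left skew described above.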

Robust statistical methods, of which the trimmed mean is a simple example, seek to outperform classical statistical methods in the presence of outliers, or, more generally, when underlying parametric assumptions are not quite correct.

Whilst the trimmed mean performs well relative to the mean in this example, better robust estimates are available. In fact, the mean, median and trimmed mean are all special cases of M-estimators. Details appear in the sections below.

Estimation of scale

The outliers in the speed of light data have more than just an adverse effect on the mean; the usual estimate of scale is the standard deviation, and this quantity is even more badly affected by outliers because the squares of the deviations from the mean go into the calculation, so the outliers' effects are exacerbated.

The plots below show the bootstrap distributions of the standard deviation, median absolute deviation (MAD) and Qn estimator of scale (Rousseeuw and Croux, 1993). The plots are based on 10000 bootstrap samples for each estimator, with some Gaussian noise added to the resampled data (smoothed bootstrap). Panel (a) shows the distribution of the standard deviation, (b) of the MAD and (c) of Qn.

The distribution of standard deviation is erratic and wide, a result of the outliers. The MAD is better behaved, and Qn is a little bit more efficient than MAD. This simple example demonstrates that when outliers are present, the standard deviation cannot be recommended as an estimate of scale.
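A Python sketch of the smoothed bootstrap for two of the three scale estimators, using the 66 Newcomb values (assumed to be the data set referred to in the text). The jitter standard deviation (1.0), the 2,000 replications and the 1.4826 MAD scaling are assumptions, since the text does not state the smoothing bandwidth; Qn is omitted for brevity. Because the two estimators sit at different levels, their variability is compared through the coefficient of variation.

```python
import random
import statistics

# Newcomb's 66 speed-of-light measurements (coded units; assumed data set).
NEWCOMB = [28, 26, 33, 24, 34, -44, 27, 16, 40, -2, 29, 22, 24, 21, 25, 30, 23,
           29, 31, 19, 24, 20, 36, 32, 36, 28, 25, 21, 28, 29, 37, 25, 28, 26,
           30, 32, 36, 26, 30, 22, 36, 23, 27, 27, 28, 27, 31, 27, 26, 33, 26,
           32, 32, 24, 39, 28, 24, 25, 32, 25, 29, 27, 28, 29, 16, 23]


def mad_scaled(xs):
    """MAD times 1.4826, so that it estimates the SD under normality."""
    med = statistics.median(xs)
    return 1.4826 * statistics.median([abs(x - med) for x in xs])


def smoothed_bootstrap(stat, data, n_boot=2000, noise_sd=1.0, seed=0):
    """Resample with replacement, add N(0, noise_sd**2) jitter, recompute stat."""
    rng = random.Random(seed)
    n = len(data)
    draws = []
    for _ in range(n_boot):
        resample = [x + rng.gauss(0.0, noise_sd) for x in rng.choices(data, k=n)]
        draws.append(stat(resample))
    return draws


def coef_variation(xs):
    """Standard deviation divided by the mean."""
    return statistics.stdev(xs) / statistics.fmean(xs)


boot_sd = smoothed_bootstrap(statistics.stdev, NEWCOMB)
boot_mad = smoothed_bootstrap(mad_scaled, NEWCOMB)
print(f"SD : average {statistics.fmean(boot_sd):.2f}, CV {coef_variation(boot_sd):.3f}")
print(f"MAD: average {statistics.fmean(boot_mad):.2f}, CV {coef_variation(boot_mad):.3f}")
```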

Manual screening for outliers

Traditionally, statisticians would manually screen data for outliers, and remove them, usually checking the source of the data to see if the outliers were erroneously recorded. Indeed, in the speed of light example above, it is easy to see and remove the two outliers prior to proceeding with any further analysis. However, in modern times, data sets often consist of large numbers of variables being measured on large numbers of experimental units. Therefore, manual screening for outliers is often impractical.

Outliers can often interact in such a way that they mask each other. As a simple example, consider a small univariate data set containing one modest and one large outlier. The estimated standard deviation will be grossly inflated by the large outlier. The result is that the modest outlier looks relatively normal. As soon as the large outlier is removed, the estimated standard deviation shrinks, and the modest outlier now looks unusual.
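The masking effect can be reproduced with a few lines of Python. The data, the 3-standard-deviation cut-off and the function names are hypothetical choices for illustration; the robust variant replaces the mean and standard deviation with the median and the normalised MAD.

```python
import statistics


def classical_flags(xs, threshold=3.0):
    """Values whose z-score based on the mean and SD exceeds the threshold."""
    m = statistics.fmean(xs)
    s = statistics.stdev(xs)
    return [x for x in xs if abs(x - m) / s > threshold]


def robust_flags(xs, threshold=3.0):
    """Same rule, but centred at the median and scaled by 1.4826 * MAD."""
    med = statistics.median(xs)
    mad = 1.4826 * statistics.median([abs(x - med) for x in xs])
    return [x for x in xs if abs(x - med) / mad > threshold]


bulk = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10] * 2  # 20 well-behaved values
data = bulk + [16, 100]                            # one modest, one large outlier

print(classical_flags(data))         # [100]: the modest outlier 16 is masked
print(classical_flags(bulk + [16]))  # [16]: visible once 100 is removed
print(robust_flags(data))            # [16, 100]: both found in one pass
```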

This problem of masking gets worse as the complexity of the data increases. For example, in regression problems, diagnostic plots are used to identify outliers. However, it is common that once a few outliers have been removed, others become visible. The problem is even worse in higher dimensions.

Robust methods provide automatic ways of detecting, downweighting (or removing), and flagging outliers, largely removing the need for manual screening. Care must be taken; initial data showing the ozone hole first appearing over Antarctica were rejected as outliers by non-human screening.[2]

Variety of applications

Although this article deals with general principles for univariate statistical methods, robust methods also exist for regression problems, generalized linear models, and parameter estimation of various distributions.

Measures of robustness

The basic tools used to describe and measure robustness are the breakdown point, the influence function and the sensitivity curve.

Breakdown point

Intuitively, the breakdown point of an estimator is the proportion of incorrect observations (e.g., arbitrarily large observations) an estimator can handle before giving an incorrect (e.g., arbitrarily large) result. For example, given $n$ independent random variables $(X_1, \ldots, X_n)$ and the corresponding realizations $x_1, \ldots, x_n$, we can use $\overline{X_n} := \frac{X_1 + \cdots + X_n}{n}$ to estimate the mean. Such an estimator has a breakdown point of 0 because we can make $\overline{x}$ arbitrarily large just by changing any of $x_1, \ldots, x_n$.

The higher the breakdown point of an estimator, the more robust it is. Intuitively, we can understand that a breakdown point cannot exceed 50% because if more than half of the observations are contaminated, it is not possible to distinguish between the underlying distribution and the contaminating distribution. Therefore, the maximum breakdown point is 0.5 and there are estimators which achieve such a breakdown point. For example, the median has a breakdown point of 0.5. The X% trimmed mean has breakdown point of X%, for the chosen level of X. Huber (1981) and Maronna et al. (2006) contain more details. The level and the power breakdown points of tests are investigated in He et al. (1990).
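An empirical version of this idea, sketched in Python: replace more and more observations with a huge value and record the smallest fraction that pushes the estimate past a large limit. The bad value, the limit and the helper name `empirical_breakdown` are illustrative assumptions. In a finite sample of 11 points the median survives 5 corrupted values, so its empirical breakdown is 6/11, which approaches 0.5 as n grows.

```python
import statistics


def empirical_breakdown(estimator, xs, bad_value=1e12, limit=1e6):
    """Smallest fraction k/n of observations which, when replaced by
    bad_value, drives |estimator| above limit (1.0 if never)."""
    n = len(xs)
    for k in range(1, n + 1):
        corrupted = [bad_value] * k + list(xs[k:])
        if abs(estimator(corrupted)) > limit:
            return k / n
    return 1.0


sample = list(range(1, 12))  # 11 well-behaved observations: 1, 2, ..., 11
print(empirical_breakdown(statistics.fmean, sample))   # 1/11, about 0.091
print(empirical_breakdown(statistics.median, sample))  # 6/11, about 0.545
```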

Statistics with high breakdown points are sometimes called resistant statistics.[3]

Example: speed of light data

In the speed of light example, removing the two lowest observations causes the mean to change from 26.21 to 27.75, a change of about 1.54. The estimate of scale produced by the Qn method is 6.3. We can divide this by the square root of the sample size to get a robust standard error, and we find this quantity to be 0.78. Thus, the change in the mean resulting from removing two outliers is approximately twice the robust standard error.

The 10% trimmed mean for the speed of light data is 27.43. Removing the two lowest observations and recomputing gives 27.67. Clearly, the trimmed mean is less affected by the outliers and has a higher breakdown point.

Notice that if we replace the lowest observation, -44, by -1000, the mean becomes 11.73, whereas the 10% trimmed mean is still 27.43. In many areas of applied statistics, it is common for data to be log-transformed to make them nearly symmetric. Very small values become large and negative when log-transformed, and zeros become negatively infinite. Therefore, this example is of practical interest.
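The arithmetic in this example can be reproduced in Python, assuming the 66 values below are Newcomb's measurements as distributed with Gelman et al.'s data sets (they reproduce every figure quoted here) and that the 10% trimmed mean drops ⌊0.1·n⌋ values from each end. The Qn scale of 6.3 is taken from the text rather than recomputed.

```python
import math
import statistics

# Newcomb's 66 speed-of-light measurements (coded units; assumed data set).
NEWCOMB = [28, 26, 33, 24, 34, -44, 27, 16, 40, -2, 29, 22, 24, 21, 25, 30, 23,
           29, 31, 19, 24, 20, 36, 32, 36, 28, 25, 21, 28, 29, 37, 25, 28, 26,
           30, 32, 36, 26, 30, 22, 36, 23, 27, 27, 28, 27, 31, 27, 26, 33, 26,
           32, 32, 24, 39, 28, 24, 25, 32, 25, 29, 27, 28, 29, 16, 23]


def trimmed_mean(xs, prop=0.1):
    """Mean after dropping floor(prop * n) observations from each end."""
    xs = sorted(xs)
    g = math.floor(prop * len(xs))
    kept = xs[g:len(xs) - g]
    return sum(kept) / len(kept)


mean_all = statistics.fmean(NEWCOMB)
without_two_lowest = sorted(NEWCOMB)[2:]         # drops -44 and -2
mean_without_two = statistics.fmean(without_two_lowest)
robust_se = 6.3 / math.sqrt(len(NEWCOMB))        # Qn value 6.3 taken from the text

print(round(mean_all, 2))                                   # 26.21
print(round(mean_without_two, 2))                           # 27.75
print(round(robust_se, 2))                                  # 0.78
print(round((mean_without_two - mean_all) / robust_se, 2))  # 1.98
print(round(trimmed_mean(NEWCOMB), 2))                      # 27.43
print(round(trimmed_mean(without_two_lowest), 2))           # 27.67
replaced = [-1000 if x == -44 else x for x in NEWCOMB]
print(round(statistics.fmean(replaced), 2))                 # 11.73
print(round(trimmed_mean(replaced), 2))                     # 27.43
```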

Empirical influence function

The empirical influence function is a measure of the dependence of the estimator on the value of one of the points in the sample. It is a model-free measure in the sense that it simply relies on calculating the estimator again with a different sample. On the right is Tukey's biweight function, which, as we will later see, is an example of what a "good" (in a sense defined later on) empirical influence function should look like.

In mathematical terms, an influence function is defined as a vector in the space of the estimator, which is in turn defined for a sample which is a subset of the population:

- $(\Omega, \mathcal{A}, P)$ is a probability space,

- $(\mathcal{X}, \Sigma)$ is a measure space (state space),

- $\Theta$ is a parameter space of dimension $p \in \mathbb{N}^*$,

- $(\Gamma, S)$ is a measure space.

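For example (with $\mathcal{B}$ the Borel σ-algebra),

- $(\Omega, \mathcal{A}, P)$ is any probability space,

- $(\mathcal{X}, \Sigma) = (\mathbb{R}, \mathcal{B})$,

- $\Theta = \mathbb{R} \times \mathbb{R}^+$,

- $(\Gamma, S) = (\mathbb{R}, \mathcal{B})$.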

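The definition of an empirical influence function is: Let $n \in \mathbb{N}^*$ and let $X_1, \ldots, X_n : (\Omega, \mathcal{A}) \rightarrow (\mathcal{X}, \Sigma)$ be iid, with $(x_1, \ldots, x_n)$ a sample from these variables. $T_n : (\mathcal{X}^n, \Sigma^n) \rightarrow (\Gamma, S)$ is an estimator. Let $i \in \{1, \ldots, n\}$. The empirical influence function $EIF_i$ at observation $i$ is defined by:

$$EIF_i : x \in \mathcal{X} \mapsto n \cdot \left( T_n(x_1, \ldots, x_{i-1}, x, x_{i+1}, \ldots, x_n) - T_n(x_1, \ldots, x_{i-1}, x_i, x_{i+1}, \ldots, x_n) \right)$$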

What this actually means is that we are replacing the i-th value in the sample by an arbitrary value $x$ and looking at the output of the estimator. Alternatively, the EIF is defined as the (scaled by n+1 instead of n) effect on the estimator of adding the point $x$ to the sample.[4]
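A direct transcription of the replacement form of the definition, in Python. The sample and the function name are hypothetical; for the mean the EIF grows linearly in the replacement value, while for the median it levels off once the new value passes the middle of the sample.

```python
import statistics


def empirical_influence(estimator, sample, i, x):
    """EIF_i(x) = n * (T_n(sample with x_i replaced by x) - T_n(sample))."""
    n = len(sample)
    replaced = list(sample)
    replaced[i] = x
    return n * (estimator(replaced) - estimator(sample))


sample = [2.1, 3.4, 1.9, 2.8, 3.0, 2.5, 2.2]  # hypothetical data, n = 7
for x in [0.0, 5.0, 50.0, 500.0]:
    eif_mean = empirical_influence(statistics.fmean, sample, 0, x)
    eif_median = empirical_influence(statistics.median, sample, 0, x)
    print(f"x={x:6.1f}  EIF(mean)={eif_mean:8.2f}  EIF(median)={eif_median:5.2f}")
```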

Influence function and sensitivity curve

Instead of relying solely on the data, we could use the distribution of the random variables. The approach is quite different from that of the previous paragraph. What we are now trying to do is to see what happens to an estimator when we change the distribution of the data slightly: it assumes a distribution, and measures sensitivity to change in this distribution. By contrast, the empirical influence assumes a sample set, and measures sensitivity to change in the samples.

Let $A$ be a convex subset of the set of all finite signed measures on $\Sigma$. We want to estimate the parameter $\theta \in \Theta$ of a distribution $F$ in $A$. Let the functional $T : A \rightarrow \Gamma$ be the asymptotic value of some estimator sequence $(T_n)_{n \in \mathbb{N}}$. We will suppose that this functional is Fisher consistent, i.e. $\forall \theta \in \Theta, \ T(F_\theta) = \theta$. This means that at the model $F$, the estimator sequence asymptotically measures the correct quantity.

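Let $G$ be some distribution in $A$. What happens when the data doesn't follow the model $F$ exactly but another, slightly different, "going towards" $G$? We're looking at:

$$dT_{G-F}(F) = \lim_{t \rightarrow 0^+} \frac{T(tG + (1-t)F) - T(F)}{t},$$

which is the one-sided directional derivative of $T$ at $F$, in the direction of $G - F$.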

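Let $x \in \mathcal{X}$. $\Delta_x$ is the probability measure which gives mass 1 to $\{x\}$. We choose $G = \Delta_x$. The influence function is then defined by:

$$IF(x; T; F) := \lim_{t \rightarrow 0^+} \frac{T(t\Delta_x + (1-t)F) - T(F)}{t}.$$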

It describes the effect of an infinitesimal contamination at the point $x$ on the estimate we are seeking, standardized by the mass of the contamination (the asymptotic bias caused by contamination in the observations). For a robust estimator, we want a bounded influence function, that is, one which does not go to infinity as x becomes arbitrarily large.
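As a worked instance of this definition (a derivation rather than code), take the mean functional $T(F) = \int y \, dF(y) = \mu$. Since $T$ is linear in $F$,

$$T(t\Delta_x + (1-t)F) = t\,x + (1-t)\,\mu \quad\Longrightarrow\quad IF(x; T; F) = \lim_{t \rightarrow 0^+} \frac{t\,x + (1-t)\,\mu - \mu}{t} = x - \mu,$$

which is unbounded in $x$. For the median $m = T(F)$ of a distribution with a density $f$ that is positive at $m$, the standard result is

$$IF(x; T; F) = \frac{\operatorname{sign}(x - m)}{2 f(m)},$$

which is bounded; at the standard normal its supremum is $1/(2\varphi(0)) = \sqrt{\pi/2} \approx 1.2533$.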

Desirable properties

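Properties of an influence function which bestow it with desirable performance are:

Rejection point

$$\rho^* := \inf_{r > 0} \{ r : IF(x; T; F) = 0, \ |x| > r \}$$

Gross-error sensitivity

$$\gamma^*(T; F) := \sup_{x \in \mathcal{X}} | IF(x; T; F) |$$

Local-shift sensitivity

$$\lambda^*(T; F) := \sup_{(x, y) \in \mathcal{X}^2, \ x \neq y} \left\| \frac{IF(y; T; F) - IF(x; T; F)}{y - x} \right\|$$

This value, which looks a lot like a Lipschitz constant, represents the effect of shifting an observation slightly from $x$ to a neighbouring point $y$, i.e., add an observation at $y$ and remove one at $x$.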

M-estimators

(The mathematical context of this paragraph is given in the section on empirical influence functions.)

Historically, several approaches to robust estimation were proposed, including R-estimators and L-estimators. However, M-estimators now appear to dominate the field as a result of their generality, high breakdown point, and their efficiency. See Huber (1981).

M-estimators are a generalization of maximum likelihood estimators (MLEs). What we try to do with MLEs is to maximize $\prod_{i=1}^n f(x_i)$ or, equivalently, minimize $\sum_{i=1}^n -\log f(x_i)$. In 1964, Huber proposed to generalize this to the minimization of $\sum_{i=1}^n \rho(x_i)$, where $\rho$ is some function. MLEs are therefore a special case of M-estimators (hence the name: "Maximum likelihood type" estimators).

Minimizing $\sum_{i=1}^n \rho(x_i)$ can often be done by differentiating $\rho$ and solving $\sum_{i=1}^n \psi(x_i) = 0$, where $\psi(x) = \frac{d\rho(x)}{dx}$ (if $\rho$ has a derivative).
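The estimating equation above can be solved by iteratively reweighted averaging. The Python sketch below treats the location case, $\sum_i \psi((x_i - \mu)/s) = 0$, assuming Huber's $\psi$ with tuning constant $k = 1.345$ and a scale $s$ held fixed at the normalised MAD; both choices, and the helper names, are illustrative rather than prescribed by the text. Each step uses the weights $w_i = \psi(r_i)/r_i$, whose fixed point solves the estimating equation.

```python
import statistics


def huber_weight(r, k=1.345):
    """IRLS weight psi(r) / r for Huber's psi with tuning constant k."""
    a = abs(r)
    return 1.0 if a <= k else k / a


def m_estimate_location(xs, weight=huber_weight, tol=1e-10, max_iter=200):
    """Solve sum psi((x_i - mu) / s) = 0 for mu by iterative reweighting.

    The scale s is fixed at the normalised MAD about the median (assumed
    nonzero); the iteration starts from the median.
    """
    mu = statistics.median(xs)
    s = 1.4826 * statistics.median([abs(x - mu) for x in xs])
    for _ in range(max_iter):
        w = [weight((x - mu) / s) for x in xs]
        new_mu = sum(wi * xi for wi, xi in zip(w, xs)) / sum(w)
        if abs(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu


data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 1000]
print(statistics.fmean(data), statistics.median(data), m_estimate_location(data))
```

For this data set the estimate stays close to the median (about 5.55) even though the mean is 104.5; the sketch does no safeguarding beyond a maximum iteration count.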

Several choices of $\rho$ and $\psi$ have been proposed. The two figures below show four $\rho$ functions and their corresponding $\psi$ functions.

For squared errors, $\rho(x)$ increases at an accelerating rate, whilst for absolute errors, it increases at a constant rate. When Winsorizing is used, a mixture of these two effects is introduced: for small values of x, $\rho(x)$ increases at the squared rate, but once the chosen threshold is reached (1.5 in this example), the rate of increase becomes constant. This Winsorised estimator is also known as the Huber loss function.

Tukey's biweight (also known as bisquare) function behaves in a similar way to the squared error function at first, but for larger errors, the function tapers off.
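The four ρ functions described here, and the two bounded ψ functions, can be written down directly. This Python sketch assumes the common scalings $\rho(x) = x^2/2$ for squared error (so that the Huber function matches it below the threshold), the threshold 1.5 mentioned above for Huber, and $c = 4.685$ for the biweight; these constants are conventions, not values fixed by the text.

```python
def rho_squared(x):
    return 0.5 * x * x


def rho_absolute(x):
    return abs(x)


def rho_huber(x, k=1.5):
    """Quadratic up to the threshold k, linear beyond it."""
    a = abs(x)
    return 0.5 * x * x if a <= k else k * a - 0.5 * k * k


def rho_biweight(x, c=4.685):
    """Tukey's biweight: rises like x^2/2 near 0, then flattens to c^2/6."""
    if abs(x) >= c:
        return c * c / 6.0
    u = (x / c) ** 2
    return c * c / 6.0 * (1.0 - (1.0 - u) ** 3)


def psi_huber(x, k=1.5):
    """Derivative of rho_huber: x clipped to [-k, k]."""
    return max(-k, min(k, x))


def psi_biweight(x, c=4.685):
    """Derivative of rho_biweight: redescends to 0 for |x| >= c."""
    return x * (1.0 - (x / c) ** 2) ** 2 if abs(x) < c else 0.0


for x in [0.5, 1.5, 3.0, 6.0]:
    print(f"x={x:4}: sq={rho_squared(x):6.3f} abs={rho_absolute(x):5.2f} "
          f"huber={rho_huber(x):6.3f} biweight={rho_biweight(x):6.3f}")
```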

Properties of M-estimators

Notice that M-estimators do not necessarily relate to a probability density function. Therefore, off-the-shelf approaches to inference that arise from likelihood theory cannot, in general, be used.

It can be shown that M-estimators are asymptotically normally distributed, so that as long as their standard errors can be computed, an approximate approach to inference is available.

Since M-estimators are normal only asymptotically, for small sample sizes it might be appropriate to use an alternative approach to inference, such as the bootstrap. However, M-estimates are not necessarily unique (i.e., there might be more than one solution that satisfies the equations). Also, it is possible that any particular bootstrap sample can contain more outliers than the estimator's breakdown point. Therefore, some care is needed when designing bootstrap schemes.

Of course, as we saw with the speed of light example, the mean is only normally distributed asymptotically, and when outliers are present the approximation can be very poor even for quite large samples. However, the type I error rates of classical statistical tests, including those based on the mean, are typically bounded above by the nominal size of the test. The same is not true of M-estimators, and the type I error rate can be substantially above the nominal level.

These considerations do not "invalidate" M-estimation in any way. They merely make clear that some care is needed in their use, as is true of any other method of estimation.

Influence function of an M-estimator

It can be shown that the influence function of an M-estimator $T$ is proportional to $\psi$ (see Huber, 1981 (and 2004), page 45), which means we can derive the properties of such an estimator (such as its rejection point, gross-error sensitivity or local-shift sensitivity) when we know its $\psi$ function.
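Because the influence function of an M-estimator is proportional to ψ, the three summaries can be approximated numerically from ψ alone. This Python sketch evaluates ψ on a grid and ignores the positive normalising constant, so the gross-error sensitivity it reports is only correct up to that factor; the grid width, step, tuning constants and helper names are assumptions. The local-shift sensitivity is approximated from adjacent grid points, which is adequate for continuous, piecewise-smooth ψ.

```python
import math


def psi_huber(x, k=1.345):
    return max(-k, min(k, x))


def psi_biweight(x, c=4.685):
    return x * (1.0 - (x / c) ** 2) ** 2 if abs(x) <= c else 0.0


def robustness_summaries(psi, half_width=10.0, step=0.001):
    """Grid approximations of (rejection point, gross-error sensitivity,
    local-shift sensitivity), treating the IF as psi itself."""
    n = round(half_width / step)
    grid = [i * step for i in range(-n, n + 1)]
    values = [psi(x) for x in grid]
    gross_error = max(abs(v) for v in values)
    if values[0] != 0.0 or values[-1] != 0.0:
        rejection = math.inf  # psi has not returned to zero by the grid edge
    else:
        rejection = max(abs(x) for x, v in zip(grid, values) if v != 0.0)
    local_shift = max(abs(values[i + 1] - values[i]) / step
                      for i in range(len(grid) - 1))
    return rejection, gross_error, local_shift


print("biweight:", robustness_summaries(psi_biweight))
print("Huber:   ", robustness_summaries(psi_huber))
```

Under these assumptions the biweight has a finite rejection point at its tuning constant, whereas Huber's ψ never returns to zero, so its rejection point is infinite; both have bounded gross-error sensitivity.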

Choice of ψ and ρ

In many practical situations, the choice of the function is not critical to gaining a good robust estimate, and many choices will give similar results that offer great improvements, in terms of efficiency and bias, over classical estimates in the presence of outliers (Huber, 1981).

Theoretically, redescending $\psi$ functions are to be preferred, and Tukey's biweight (also known as bisquare) function is a popular choice. Maronna et al. (2006) recommend the biweight function with efficiency at the normal set to 85%.
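A Python sketch of a biweight location estimate using iterative reweighting. The tuning constant $c \approx 3.44$ is the value usually tabulated for 85% efficiency at the normal (an assumption to check against Maronna et al.); the scale is fixed at the normalised MAD and the iteration starts from the median, which matters because biweight estimating equations can have several roots. Function names are hypothetical.

```python
import statistics


def biweight_weight(r, c=3.44):
    """IRLS weight psi(r) / r for Tukey's biweight: (1 - (r/c)^2)^2 on |r| < c, else 0."""
    return (1.0 - (r / c) ** 2) ** 2 if abs(r) < c else 0.0


def biweight_location(xs, c=3.44, tol=1e-10, max_iter=500):
    """Biweight M-estimate of location with the scale fixed at the normalised MAD.

    Starts from the median; a different start could reach a different root.
    """
    mu = statistics.median(xs)
    s = 1.4826 * statistics.median([abs(x - mu) for x in xs])
    for _ in range(max_iter):
        w = [biweight_weight((x - mu) / s, c) for x in xs]
        new_mu = sum(wi * xi for wi, xi in zip(w, xs)) / sum(w)
        if abs(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu


data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 1000]
print(biweight_location(data))  # the gross error receives zero weight
```

Unlike the Huber weight, the biweight weight drops to exactly zero for large standardised residuals, so here the estimate converges to the centre of the nine well-behaved values.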

Robust parametric approaches

M-estimators do not necessarily relate to a density function and so are not fully parametric. Fully parametric approaches to robust modeling and inference, both Bayesian and likelihood approaches, usually deal with heavy-tailed distributions such as Student's t-distribution.

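For the t-distribution with $\nu$ degrees of freedom, it can be shown that

$$\psi(x) = \frac{(\nu + 1)\,x}{x^2 + \nu},$$

that is, $\psi$ is proportional to $x / (x^2 + \nu)$.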

For $\nu = 1$, the t-distribution is equivalent to the Cauchy distribution. Notice that the degrees of freedom $\nu$ is sometimes known as the kurtosis parameter. It is the parameter that controls how heavy the tails are. In principle, $\nu$ can be estimated from the data in the same way as any other parameter. In practice, it is common for there to be multiple local maxima when $\nu$ is allowed to vary. As such, it is common to fix $\nu$ at a value around 4 or 6. The figure below displays the $\psi$-function for 4 different values of $\nu$.
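Under this model, ψ and a maximum likelihood fit with fixed ν can be sketched in Python. The fit uses the standard EM iteration for a t location–scale model with known degrees of freedom, in which each observation receives weight $(\nu + 1)/(\nu + d_i)$ with $d_i$ its squared standardised residual; the default $\nu = 4$, starting values, tolerances and function names are assumptions, and no results for the speed of light data are claimed here.

```python
import math
import statistics


def t_psi(x, nu):
    """psi(x) = (nu + 1) x / (x^2 + nu): derivative of -log of the standard t density."""
    return (nu + 1) * x / (x * x + nu)


def fit_t_location_scale(xs, nu=4.0, tol=1e-10, max_iter=1000):
    """EM iterations for the location and scale of a t model with nu held fixed."""
    n = len(xs)
    mu = statistics.median(xs)
    sigma2 = (1.4826 * statistics.median([abs(x - mu) for x in xs])) ** 2
    for _ in range(max_iter):
        # E-step: weight (nu + 1) / (nu + d_i), d_i = squared standardised residual.
        w = [(nu + 1) / (nu + (x - mu) ** 2 / sigma2) for x in xs]
        # M-step: weighted mean, then weighted mean square about it.
        new_mu = sum(wi * xi for wi, xi in zip(w, xs)) / sum(w)
        new_sigma2 = sum(wi * (xi - new_mu) ** 2 for wi, xi in zip(w, xs)) / n
        done = abs(new_mu - mu) < tol and abs(new_sigma2 - sigma2) < tol
        mu, sigma2 = new_mu, new_sigma2
        if done:
            break
    return mu, math.sqrt(sigma2)


# psi redescends: it peaks at x = sqrt(nu) and then decays towards 0.
print([round(t_psi(x, 4.0), 3) for x in (0.0, 2.0, 10.0, 1000.0)])
print(fit_t_location_scale([1, 2, 3, 4, 5, 6, 7, 8, 9, 1000]))
```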

Example: speed of light data

For the speed of light data, allowing the kurtosis parameter to vary and maximizing the likelihood, we get

Fixing and maximizing the likelihood gives

Related concepts

A pivotal quantity is a function of data, whose underlying population distribution is a member of a parametric family, that is not dependent on the values of the parameters. An ancillary statistic is such a function that is also a statistic, meaning that it is computed in terms of the data alone. Such functions are robust to parameters in the sense that they are independent of the values of the parameters, but not robust to the model in the sense that they assume an underlying model (parametric family), and in fact such functions are often very sensitive to violations of the model assumptions. Thus test statistics, frequently constructed in terms of these so as not to be sensitive to assumptions about parameters, are still very sensitive to model assumptions.

Replacing outliers and missing values

If there are relatively few missing points, there are some models which can be used to estimate values to complete the series, such as replacing missing values with the mean or median of the data. Simple linear regression can also be used to estimate missing values (MacDonald and Zucchini, 1997; Harvey, 1989). In addition, outliers can sometimes be accommodated in the data through the use of trimmed means, scale estimators other than the standard deviation (e.g., MAD) and Winsorization (McBean and Rovers, 1998). In calculations of a trimmed mean, a fixed percentage of data is dropped from each end of the ordered data, thus eliminating the outliers. The mean is then calculated using the remaining data. Winsorizing involves accommodating an outlier by replacing it with the next highest or next lowest value as appropriate (Rustum & Adeloye, 2007).[5]

However, using these types of models to predict missing values or outliers in a long time series is difficult and often unreliable, particularly if the number of values to be in-filled is relatively high in comparison with total record length. The accuracy of the estimate depends on how good and representative the model is and how long the period of missing values extends (Rosen and Lennox, 2001). In the case of a dynamic process, any variable depends not just on the historical time series of the same variable but also on several other variables or parameters of the process. In other words, the problem is an exercise in multivariate analysis rather than the univariate approach of most of the traditional methods of estimating missing values and outliers; a multivariate model will therefore be more representative than a univariate one for predicting missing values. The Kohonen self-organising map (KSOM) offers a simple and robust multivariate model for data analysis, thus providing good possibilities to estimate missing values, taking into account their relationship or correlation with other pertinent variables in the data record (Rustum & Adeloye 2007).

Standard Kalman filters are not robust to outliers. To this end Ting, Theodorou and Schaal have recently shown that a modification of Masreliez's theorem can deal with outliers.[6]
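For intuition only, the sketch below contrasts a scalar random-walk Kalman filter with a variant that clips the standardised innovation (a Huber-type modification). This is a swapped-in, simpler technique, not the method of Ting, Theodorou and Schaal; the noise variances, clipping level and function name are hypothetical.

```python
import math


def kalman_1d(zs, q=0.01, r=1.0, x0=0.0, p0=1.0, clip=None):
    """Scalar Kalman filter for a random-walk state observed with noise.

    q: process-noise variance, r: measurement-noise variance.
    If clip is given, the standardised innovation is clipped to [-clip, clip]
    before the update (a Huber-type tweak, not Ting et al.'s method).
    """
    x, p = x0, p0
    estimates = []
    for z in zs:
        p = p + q                      # predict: mean unchanged, variance grows
        s = p + r                      # innovation variance
        gain = p / s
        innovation = z - x
        if clip is not None:
            t = innovation / math.sqrt(s)
            innovation = max(-clip, min(clip, t)) * math.sqrt(s)
        x = x + gain * innovation      # update
        p = (1.0 - gain) * p
        estimates.append(x)
    return estimates


zs = [0.0] * 20
zs[10] = 100.0                         # a single gross measurement error
plain = kalman_1d(zs)
robust = kalman_1d(zs, clip=2.0)
print(round(plain[10], 2), round(robust[10], 2))
```

With these settings the standard filter's estimate jumps by roughly a tenth of the gross error, while the clipped variant moves only slightly.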

One common approach to handle outliers in data analysis is to perform outlier detection first, followed by an efficient estimation method (e.g., least squares). While this approach is often useful, one must keep in mind two challenges. First, an outlier deletion method that relies on a non-robust initial fit can suffer from the effect of masking, that is, a group of outliers can mask each other and escape detection (Rousseeuw and Leroy, 2007). Second, if a high breakdown initial fit is used for outlier detection, the follow-up analysis might inherit some of the inefficiencies of the initial estimator (He and Portnoy, 1992).

See also

References

- Robust Statistics - The Approach Based on Influence Functions, Frank R. Hampel, Elvezio M. Ronchetti, Peter J. Rousseeuw and Werner A. Stahel, Wiley, 1986 (republished in paperback, 2005)

- Robust Statistics, Peter J. Huber, Wiley, 1981 (republished in paperback, 2004)

- Robust Regression and Outlier Detection, Peter J. Rousseeuw and Annick M. Leroy, Wiley, 1987 (republished in paperback, 2003)

- Robust Statistics - Theory and Methods, Ricardo Maronna, R. Douglas Martin and Victor Yohai, Wiley, 2006

- Rousseeuw, P.J. and Croux, C. "Alternatives to the Median Absolute Deviation," Journal of the American Statistical Association 88 (1993), 1273

- He, X. and Portnoy, S. "Reweighted LS Estimators Converge at the same Rate as the Initial Estimator," Annals of Statistics Vol. 20, No. 4 (1992), 2161–2167

- He, X., Simpson, D.G. and Portnoy, S. "Breakdown Robustness of Tests," Journal of the American Statistical Association Vol. 85, No. 40 (1990), 446–452

- Portnoy S. and He, X. "A Robust Journey in the New Millennium," Journal of the American Statistical Association Vol. 95, No. 452 (Dec., 2000), 1331–1335

- Stephen M. Stigler. "The Changing History of Robustness," The American Statistician November 1, 2010, 64(4): 277–281.

- Wilcox, R. "Introduction to Robust Estimation & Hypothesis Testing," Academic Press, 201

External links

- Brian Ripley's robust statistics course notes.

- Nick Fieller's course notes on Statistical Modelling and Computation contain material on robust regression.

- David Olive's site contains course notes on robust statistics and some data sets.

- Online experiments using R and JSXGraph

- Robust Statistics, Peter J. Huber, Wiley, 1981 (republished in paperback, 2004), page 1.

- When was the ozone hole discovered, Weather Underground http://www.wunderground.com/climate/holefaq.asp

- Resistant statistics, David B. Stephenson

- See Ollila and Koivunen http://cc.oulu.fi/~esollila/papers/ssp03fin.pdf

- Rustum, R. and A. J. Adeloye (2007); Replacing outliers and missing values from activated sludge data using Kohonen Self Organizing Map, Journal of Environmental Engineering, 133 (9), 909–916.

- Jo-anne Ting, Evangelos Theodorou and Stefan Schaal; "A Kalman filter for robust outlier detection", International Conference on Intelligent Robots and Systems - IROS, pp. 1514–1519 (2007).