|

|

| Line 1: |

Line 1: |

| {{distinguish2|[[combinational logic]], a topic in digital electronics}} | | {{Trigonometry}} |

| {{bots|deny=D6,AWB}}

| | In [[mathematics]], tables of [[trigonometric function]]s are useful in a number of areas. Before the existence of [[pocket calculator]]s, '''trigonometric tables''' were essential for [[navigation]], [[science]] and [[engineering]]. The calculation of [[mathematical table]]s was an important area of study, which led to the development of the [[history of computing|first mechanical computing devices]]. |

| '''Combinatory logic''' is a notation to eliminate the need for [[Variable (mathematics)|variables]] in [[mathematical logic]]. It was introduced by [[Moses Schönfinkel]] and [[Haskell Curry]] and has more recently been used in [[computer science]] as a theoretical model of [[computation]] and also as a basis for the design of [[functional programming languages]]. It is based on '''combinators'''. A combinator is a [[higher-order function]] that uses only function application and earlier defined combinators to define a result from its arguments.

| |

|

| |

|

| ==Combinatory logic in mathematics==

| | Modern computers and pocket calculators now generate trigonometric function values on demand, using special libraries of mathematical code. Often, these libraries use pre-calculated tables internally, and compute the required value by using an appropriate interpolation method. Interpolation of simple look-up tables of trigonometric functions is still used in [[computer graphics]], where only modest accuracy may be required and speed is often paramount. |

| Combinatory logic was originally intended as a 'pre-logic' that would clarify the role of [[quantifier|quantified variables]] in logic, essentially by eliminating them. Another way of eliminating quantified variables is [[Willard Van Orman Quine|Quine's]] [[predicate functor logic]]. While the [[expressive power]] of combinatory logic typically exceeds that of [[first-order logic]], the expressive power of [[predicate functor logic]] is identical to that of first order logic ([[#Quine 1960 1966|Quine 1960, 1966, 1976]]).

| |

|

| |

|

| The original inventor of combinatory logic, [[Moses Schönfinkel]], published nothing on combinatory logic after his original 1924 paper, and largely ceased to publish after [[Joseph Stalin]] consolidated his power in 1929. Curry rediscovered the combinators while working as an instructor at the [[Princeton University]] in late 1927.<ref name="Seldin 2006">{{cite journal|last=Seldin|first=Jonathan|title=The Logic of Curry and Church}}</ref> In the latter 1930s, [[Alonzo Church]] and his students at [[Princeton University|Princeton]] invented a rival formalism for functional abstraction, the [[lambda calculus]], which proved more popular than combinatory logic. The upshot of these historical contingencies was that until theoretical computer science began taking an interest in combinatory logic in the 1960s and 1970s, nearly all work on the subject was by [[Haskell Curry]] and his students, or by [[Robert Feys]] in [[Belgium]]. Curry and Feys (1958), and Curry ''et al.'' (1972) survey the early history of combinatory logic. For a more modern parallel treatment of combinatory logic and the lambda calculus, see [[Henk Barendregt|Barendregt]]<!-- correct person? --> (1984), who also reviews the [[model theory|models]] [[Dana Scott]] devised for combinatory logic in the 1960s and 1970s.

| | Another important application of trigonometric tables and generation schemes is for [[fast Fourier transform]] (FFT) algorithms, where the same trigonometric function values (called ''twiddle factors'') must be evaluated many times in a given transform, especially in the common case where many transforms of the same size are computed. In this case, calling generic library routines every time is unacceptably slow. One option is to call the library routines once, to build up a table of those trigonometric values that will be needed, but this requires significant memory to store the table. The other possibility, since a regular sequence of values is required, is to use a recurrence formula to compute the trigonometric values on the fly. Significant research has been devoted to finding accurate, stable recurrence schemes in order to preserve the accuracy of the FFT (which is very sensitive to trigonometric errors). |

| <!-- This section needs a LOT of filling in!!! -->

| |

|

| |

|

| ==Combinatory logic in computing== | | ==On-demand computation== |

| In [[computer science]], combinatory logic is used as a simplified model of [[computation]], used in [[computability theory]] and [[proof theory]]. Despite its simplicity, combinatory logic captures many essential features of computation.

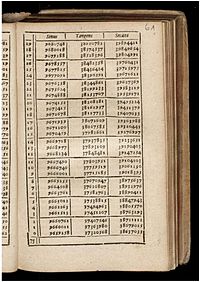

| | [[Image:Bernegger Manuale 137.jpg|thumb|right|200px|A page from a 1619 book of [[mathematical table]]s]] |

| | Modern computers and calculators use a variety of techniques to provide trigonometric function values on demand for arbitrary angles (Kantabutra, 1996). One common method, especially on higher-end processors with [[Floating point|floating-point]] units, is to combine a [[polynomial]] or [[rational function|rational]] [[approximation theory|approximation]] (such as [[Chebyshev approximation]], best uniform approximation, and [[Padé approximant|Padé approximation]], and typically for higher or variable precisions, [[Taylor series|Taylor]] and [[Laurent series]]) with range reduction and a table lookup — they first look up the closest angle in a small table, and then use the polynomial to compute the correction. Maintaining precision while performing such interpolation is nontrivial, however; and methods like [[Gal's accurate tables]], Cody and Waite reduction, and Payne and Hanek reduction algorithms can be used for this purpose. On simpler devices that lack a [[multiplication ALU|hardware multiplier]], there is an algorithm called [[CORDIC]] (as well as related techniques) that is more efficient, since it uses only [[shift operator|shift]]s and additions. All of these methods are commonly implemented in [[computer hardware|hardware]] for performance reasons. |

|

| |

|

| Combinatory logic can be viewed as a variant of the [[lambda calculus]], in which lambda expressions (representing functional abstraction) are replaced by a limited set of ''combinators'', primitive functions from which [[bound variable]]s are absent. It is easy to transform lambda expressions into combinator expressions, and combinator reduction is much simpler than lambda reduction. Hence combinatory logic has been used to model some [[non-strict programming language|non-strict]] [[functional programming]] languages and [[graph reduction machine|hardware]]. The purest form of this view is the programming language [[Unlambda]], whose sole primitives are the S and K combinators augmented with character input/output. Although not a practical programming language, Unlambda is of some theoretical interest.

| | For [[arbitrary-precision arithmetic|very high precision]] calculations, when series-expansion convergence becomes too slow, trigonometric functions can be approximated by the [[arithmetic-geometric mean]], which itself approximates the trigonometric function by the ([[complex number|complex]]) [[elliptic integral]] (Brent, 1976). |

|

| |

|

| Combinatory logic can be given a variety of interpretations. Many early papers by Curry showed how to translate axiom sets for conventional logic into combinatory logic equations (Hindley and Meredith 1990). Dana Scott in the 1960s and 1970s showed how to marry [[model theory]] and combinatory logic.

| | Trigonometric functions of angles that are [[rational number|rational]] multiples of 2π are [[algebraic number]]s, related to [[roots of unity]], and can be computed with a [[polynomial]] [[root-finding algorithm]] in the [[complex plane]]. For example, the cosine and sine of 2π ⋅ 5/37 are the [[real part|real]] and [[imaginary part]]s, respectively, of a 37th root of unity, corresponding to a root of a [[degree of a polynomial|degree]]-37 polynomial ''x''<sup>37</sup> − 1. Root-finding algorithms such as [[Newton's method]] are much simpler than the arithmetic-geometric mean algorithms above while converging at a similar asymptotic rate; the latter algorithms are required for [[transcendental number|transcendental]] trigonometric constants, however. |

|

| |

|

| == Summary of the lambda calculus == | | ==Half-angle and angle-addition formulas== |

| {{main|lambda calculus}}

| |

|

| |

|

| The lambda calculus is

| | Historically, the earliest method by which trigonometric tables were computed, and probably the most common until the advent of computers, was to repeatedly apply the half-angle and angle-addition [[Trigonometric identity|trigonometric identities]] starting from a known value (such as sin(π/2) = 1, cos(π/2) = 0). This method was used by the ancient astronomer [[Ptolemy]], who derived them in the ''[[Almagest]]'', a treatise on astronomy. In modern form, the identities he derived are stated as follows (with signs determined by the quadrant in which ''x'' lies): |

| concerned with objects called ''lambda-terms'', which are strings of

| |

| symbols of one of the following forms:

| |

|

| |

|

| * ''v''

| | :<math>\cos\left(\frac{x}{2}\right) = \pm \sqrt{\tfrac{1}{2}(1 + \cos x)}</math> |

| * ''λv''.''E1''

| |

| * (''E1'' ''E2'')

| |

|

| |

|

| where ''v'' is a variable name drawn from a predefined infinite set of

| | :<math>\sin\left(\frac{x}{2}\right) = \pm \sqrt{\tfrac{1}{2}(1 - \cos x)}</math> |

| variable names, and ''E1'' and ''E2'' are lambda-terms.

| |

|

| |

|

| Terms of the form ''λv.E1'' are called ''abstractions''. The variable ''v'' is

| | :<math>\sin(x \pm y) = \sin(x) \cos(y) \pm \cos(x) \sin(y)\,</math> |

| called the [[formal parameter]] of the abstraction, and ''E1'' is the ''body''

| |

| of the abstraction. The term ''λv.E1'' represents the function which, applied

| |

| to an argument, binds the formal parameter ''v'' to the argument and then

| |

| computes the resulting value of ''E1''---that is, it returns ''E1'', with

| |

| every occurrence of ''v'' replaced by the argument.

| |

|

| |

|

| Terms of the form ''(E1 E2)'' are called ''applications''. Applications model

| | :<math>\cos(x \pm y) = \cos(x) \cos(y) \mp \sin(x) \sin(y)\,</math> |

| function invocation or execution: the function represented by ''E1'' is to be

| |

| invoked, with ''E2'' as its argument, and the result is computed. If ''E1''

| |

| (sometimes called the ''applicand'') is an abstraction, the term may be | |

| ''reduced'': ''E2'', the argument, may be substituted into the body of ''E1''

| |

| in place of the formal parameter of ''E1'', and the result is a new lambda

| |

| term which is ''equivalent'' to the old one. If a lambda term contains no

| |

| subterms of the form ''((λv.E1) E2)'' then it cannot be reduced, and is said to

| |

| be in [[Beta normal form|normal form]].

| |

|

| |

|

| The expression ''E''[''v'' := ''a''] represents the result of taking the term ''E'' and replacing all free occurrences of ''v'' with ''a''. Thus we write

| | These were used to construct [[Ptolemy's table of chords]], which was applied to astronomical problems. |

|

| |

|

| :(''λv.E'' ''a'') => ''E''[''v'' := ''a''] | | Various other permutations on these identities are possible: for example, some early trigonometric tables used not sine and cosine, but sine and [[versine]]). |

|

| |

|

| By convention, we take ''(a b c d ... z)'' as short for ''(...(((a b) c) d) ... z)''. (i.e., application is [[Associative#Non-associativity|left associative]].)

| | ==A quick, but inaccurate, approximation== |

|

| |

|

| The motivation for this definition of reduction is that it captures

| | A quick, but inaccurate, algorithm for calculating a table of ''N'' approximations ''s''<sub>''n''</sub> for [[sine|sin]](2[[Pi|π]]''n''/''N'') and ''c''<sub>''n''</sub> for [[cosine|cos]](2π''n''/''N'') is: |

| the essential behavior of all mathematical functions. For example,

| |

| consider the function that computes the square of a number. We might

| |

| write

| |

|

| |

|

| :The square of ''x'' is ''x''*''x'' | | :''s''<sub>0</sub> = 0 |

| | :''c''<sub>0</sub> = 1 |

| | :''s''<sub>''n''+1</sub> = ''s''<sub>''n''</sub> + ''d'' × ''c''<sub>''n''</sub> |

| | :''c''<sub>''n''+1</sub> = ''c''<sub>''n''</sub> − ''d'' × ''s''<sub>''n''</sub> |

| | for ''n'' = 0,...,''N'' − 1, where ''d'' = 2π/''N''. |

|

| |

|

| (Using "*" to indicate multiplication.) ''x'' here is the [[formal parameter]] of the function. To evaluate the square for a particular

| | This is simply the [[Numerical ordinary differential equations#The Euler method|Euler method]] for integrating the [[differential equation]]: |

| argument, say 3, we insert it into the definition in place of the

| |

| formal parameter:

| |

|

| |

|

| :The square of 3 is 3*3 | | :<math>ds/dt = c</math> |

| | :<math>dc/dt = -s</math> |

|

| |

|

| To evaluate the resulting expression 3*3, we would have to resort to

| | with initial conditions ''s''(0) = 0 and ''c''(0) = 1, whose analytical solution is ''s'' = sin(''t'') and ''c'' = cos(''t''). |

| our knowledge of multiplication and the number 3. Since any

| |

| computation is simply a composition of the evaluation of suitable

| |

| functions on suitable primitive arguments, this simple substitution

| |

| principle suffices to capture the essential mechanism of computation.

| |

| Moreover, in the lambda calculus, notions such as '3' and '*' can be

| |

| represented without any need for externally defined primitive

| |

| operators or constants. It is possible to identify terms in the

| |

| lambda calculus, which, when suitably interpreted, behave like the

| |

| number 3 and like the multiplication operator, q.v. [[Church encoding]].

| |

|

| |

|

| The lambda calculus is known to be computationally equivalent in power to

| | Unfortunately, this is not a useful algorithm for generating sine tables because it has a significant error, proportional to 1/''N''. |

| many other plausible models for computation (including [[Turing machine]]s); that is, any calculation that can be accomplished in any

| |

| of these other models can be expressed in the lambda calculus, and

| |

| vice versa. According to the [[Church-Turing thesis]], both models

| |

| can express any possible computation.

| |

|

| |

|

| It is perhaps surprising that lambda-calculus can represent any

| | For example, for ''N'' = 256 the maximum error in the sine values is ~0.061 (''s''<sub>202</sub> = −1.0368 instead of −0.9757). For ''N'' = 1024, the maximum error in the sine values is ~0.015 (''s''<sub>803</sub> = −0.99321 instead of −0.97832), about 4 times smaller. If the sine and cosine values obtained were to be plotted, this algorithm would draw a logarithmic spiral rather than a circle. |

| conceivable computation using only the simple notions of function

| |

| abstraction and application based on simple textual substitution of

| |

| terms for variables. But even more remarkable is that abstraction is

| |

| not even required. ''Combinatory logic'' is a model of computation

| |

| equivalent to the lambda calculus, but without abstraction. The advantage

| |

| of this is that evaluating expressions in lambda calculus is quite complicated

| |

| because the semantics of substitution must be specified with great care to

| |

| avoid variable capture problems. In contrast, evaluating expressions in

| |

| combinatory logic is much simpler, because there is no notion of substitution.

| |

|

| |

|

| == Combinatory calculi == | | ==A better, but still imperfect, recurrence formula== |

|

| |

|

| Since abstraction is the only way to manufacture functions in the

| | A simple recurrence formula to generate trigonometric tables is based on [[Euler's formula]] and the relation: |

| lambda calculus, something must replace it in the combinatory

| |

| calculus. Instead of abstraction, combinatory calculus provides a

| |

| limited set of primitive functions out of which other functions may be

| |

| built.

| |

|

| |

|

| === Combinatory terms === | | :<math>e^{i(\theta + \Delta\theta)} = e^{i\theta} \times e^{i\Delta\theta}</math> |

|

| |

|

| A combinatory term has one of the following forms:

| | This leads to the following recurrence to compute trigonometric values ''s''<sub>''n''</sub> and ''c''<sub>''n''</sub> as above: |

| *''x''

| |

| *''P''

| |

| *(''E<sub>1</sub>'' ''E<sub>2</sub>'')

| |

| where ''x'' is a variable, ''P'' is one of the primitive functions, and (''E<sub>1</sub>'' ''E<sub>2</sub>'') is the application of combinatory terms ''E<sub>1</sub>'' and ''E<sub>2</sub>''. The primitive functions themselves are ''combinators'', or functions that, when seen as lambda terms, contain no [[free variable]]s.

| |

| To shorten the notations, a general convention is that (''E<sub>1</sub>'' ''E<sub>2</sub>'' ''E<sub>3</sub>'' ... ''E<sub>n</sub>''), or even ''E<sub>1</sub>'' ''E<sub>2</sub>'' ''E<sub>3</sub>''... ''E''<sub>n</sub>, denotes the term (...((''E<sub>1</sub>'' ''E<sub>2</sub>'') ''E<sub>3</sub>'')... ''E<sub>n</sub>''). This is the same general convention (left-associativity) as for multiple application in lambda calculus.

| |

|

| |

|

| === Reduction in combinatory logic === | | :''c''<sub>0</sub> = 1 |

| | :''s''<sub>0</sub> = 0 |

| | :''c''<sub>''n''+1</sub> = ''w''<sub>''r''</sub> ''c''<sub>''n''</sub> − ''w''<sub>''i''</sub> ''s''<sub>''n''</sub> |

| | :''s''<sub>''n''+1</sub> = ''w''<sub>''i''</sub> ''c''<sub>''n''</sub> + ''w''<sub>''r''</sub> ''s''<sub>''n''</sub> |

| | for ''n'' = 0, ..., ''N'' − 1, where ''w''<sub>''r''</sub> = cos(2π/''N'') and ''w''<sub>''i''</sub> = sin(2π/''N''). These two starting trigonometric values are usually computed using existing library functions (but could also be found e.g. by employing [[Newton's method]] in the complex plane to solve for the primitive [[root of unity|root]] of ''z''<sup>''N''</sup> − 1). |

|

| |

|

| In combinatory logic, each primitive combinator comes with a reduction rule of the form | | This method would produce an ''exact'' table in exact arithmetic, but has errors in finite-precision [[floating-point]] arithmetic. In fact, the errors grow as O(ε ''N'') (in both the worst and average cases), where ε is the floating-point precision. |

|

| |

|

| :(''P'' ''x<sub>1</sub>'' ... ''x<sub>n</sub>'') = ''E''

| | A significant improvement is to use the following modification to the above, a trick (due to Singleton, 1967) often used to generate trigonometric values for FFT implementations: |

|

| |

|

| where ''E'' is a term mentioning only variables from the set ''x<sub>1</sub>'' ... ''x<sub>n</sub>''. It is in this way that primitive combinators behave as functions.

| | :''c''<sub>0</sub> = 1 |

| | :''s''<sub>0</sub> = 0 |

| | :''c''<sub>''n''+1</sub> = ''c''<sub>''n''</sub> − (α''c''<sub>''n''</sub> + β ''s''<sub>''n''</sub>) |

| | :''s''<sub>''n''+1</sub> = ''s''<sub>''n''</sub> + (β ''c''<sub>''n''</sub> − α ''s''<sub>''n''</sub>) |

|

| |

|

| === Examples of combinators === | | where α = 2 sin<sup>2</sup>(π/''N'') and β = sin(2π/''N''). The errors of this method are much smaller, O(ε √''N'') on average and O(ε ''N'') in the worst case, but this is still large enough to substantially degrade the accuracy of FFTs of large sizes. |

| | |

| The simplest example of a combinator is '''I''', the identity

| |

| combinator, defined by

| |

| | |

| :('''I''' ''x'') = ''x''

| |

| | |

| for all terms ''x''. Another simple combinator is '''K''', which

| |

| manufactures constant functions: ('''K''' ''x'') is the function which,

| |

| for any argument, returns ''x'', so we say

| |

| | |

| :(('''K''' ''x'') ''y'') = ''x''

| |

| | |

| for all terms ''x'' and ''y''. Or, following the convention for

| |

| multiple application,

| |

| | |

| :('''K''' ''x'' ''y'') = ''x''

| |

| | |

| A third combinator is '''S''', which is a generalized version of

| |

| application:

| |

| | |

| :('''S''' ''x'' ''y'' ''z'') = (''x'' ''z'' (''y'' ''z''))

| |

| | |

| '''S''' applies ''x'' to ''y'' after first substituting ''z'' into

| |

| each of them. Or put another way, ''x'' is applied to ''y'' inside the

| |

| environment ''z''.

| |

| | |

| Given '''S''' and '''K''', '''I''' itself is unnecessary, since it can

| |

| be built from the other two:

| |

| | |

| :(('''S''' '''K''' '''K''') ''x'')

| |

| :: = ('''S''' '''K''' '''K''' ''x'')

| |

| :: = ('''K''' ''x'' ('''K''' ''x''))

| |

| :: = ''x''

| |

| | |

| for any term ''x''. Note that although (('''S''' '''K''' '''K''')

| |

| ''x'') = ('''I''' ''x'') for any ''x'', ('''S''' '''K''' '''K''')

| |

| itself is not equal to '''I'''. We say the terms are [[extensional equality|extensionally equal]]. Extensional equality captures the

| |

| mathematical notion of the equality of functions: that two functions

| |

| are ''equal'' if they always produce the same results for the same

| |

| arguments. In contrast, the terms themselves, together with the

| |

| reduction of primitive combinators, capture the notion of

| |

| ''intensional equality'' of functions: that two functions are ''equal''

| |

| only if they have identical implementations up to the expansion of primitive

| |

| combinators when these ones are applied to enough arguments. There are many ways to

| |

| implement an identity function; ('''S''' '''K''' '''K''') and '''I'''

| |

| are among these ways. ('''S''' '''K''' '''S''') is yet another. We

| |

| will use the word ''equivalent'' to indicate extensional equality,

| |

| reserving ''equal'' for identical combinatorial terms.

| |

| | |

| A more interesting combinator is the [[fixed point combinator]] or '''Y''' combinator, which can be used to implement [[recursion]].

| |

| | |

| === Completeness of the '''S'''-'''K''' basis ===

| |

| | |

| It is perhaps astonishing that '''S''' and '''K''' can be

| |

| composed to produce combinators that are extensionally equal to

| |

| ''any'' lambda term, and therefore, by Church's thesis, to any

| |

| computable function whatsoever. The proof is to present a transformation,

| |

| ''T''[ ], which converts an arbitrary lambda term into an equivalent

| |

| combinator.

| |

| | |

| ''T''[ ] may be defined as follows:

| |

| | |

| # ''T''[''x''] => ''x''

| |

| # ''T''[(''E₁'' ''E₂'')] => (''T''[''E₁''] ''T''[''E₂''])

| |

| # ''T''[''λx''.''E''] => ('''K''' ''T''[''E'']) (if ''x'' does not occur free in ''E'')

| |

| # ''T''[''λx''.''x''] => '''I'''

| |

| # ''T''[''λx''.''λy''.''E''] => ''T''<nowiki>[</nowiki>''λx''.''T''<nowiki>[</nowiki>''λy''.''E''<nowiki>]]</nowiki> (if ''x'' occurs free in ''E'')

| |

| # ''T''[''λx''.(''E₁'' ''E₂'')] => ('''S''' ''T''[''λx''.''E₁''] ''T''[''λx''.''E₂''])

| |

| This process is also known as ''abstraction elimination''.

| |

| | |

| ==== Conversion of a lambda term to an equivalent combinatorial term ====

| |

| | |

| For example, we will convert the lambda term ''λx''.''λy''.(''y'' ''x'') to a

| |

| combinator:

| |

| | |

| :''T''[''λx''.''λy''.(''y'' ''x'')]

| |

| :: = ''T''<nowiki>[</nowiki>''λx''.''T''<nowiki>[</nowiki>''λy''.(''y'' ''x'')<nowiki>]]</nowiki> (by 5)

| |

| :: = ''T''[''λx''.('''S''' ''T''[''λy''.''y''] ''T''[''λy''.''x''])] (by 6)

| |

| :: = ''T''[''λx''.('''S''' '''I''' ''T''[''λy''.''x''])] (by 4)

| |

| :: = ''T''[''λx''.('''S''' '''I''' ('''K''' ''x''))] (by 3 and 1)

| |

| :: = ('''S''' ''T''[''λx''.('''S''' '''I''')] ''T''[''λx''.('''K''' ''x'')]) (by 6)

| |

| :: = ('''S''' ('''K''' ('''S''' '''I''')) ''T''[''λx''.('''K''' ''x'')]) (by 3)

| |

| :: = ('''S''' ('''K''' ('''S''' '''I''')) ('''S''' ''T''[''λx''.'''K'''] ''T''[''λx''.''x''])) (by 6)

| |

| :: = ('''S''' ('''K''' ('''S''' '''I''')) ('''S''' ('''K''' '''K''') ''T''[''λx''.''x''])) (by 3)

| |

| :: = ('''S''' ('''K''' ('''S''' '''I''')) ('''S''' ('''K''' '''K''') '''I''')) (by 4)

| |

| | |

| If we apply this combinator to any two terms ''x'' and ''y'', it

| |

| reduces as follows:

| |

| | |

| : ('''S''' ('''K''' ('''S''' '''I''')) ('''S''' ('''K''' '''K''') '''I''') x y)

| |

| :: = ('''K''' ('''S''' '''I''') x ('''S''' ('''K''' '''K''') '''I''' x) y)

| |

| :: = ('''S''' '''I''' ('''S''' ('''K''' '''K''') '''I''' x) y)

| |

| :: = ('''I''' y ('''S''' ('''K''' '''K''') '''I''' x y))

| |

| :: = (y ('''S''' ('''K''' '''K''') '''I''' x y))

| |

| :: = (y ('''K''' '''K''' x ('''I''' x) y))

| |

| :: = (y ('''K''' ('''I''' x) y))

| |

| :: = (y ('''I''' x))

| |

| :: = (y x)

| |

| | |

| The combinatory representation, ('''S''' ('''K''' ('''S''' '''I''')) ('''S''' ('''K''' '''K''') '''I''')) is much

| |

| longer than the representation as a lambda term, ''λx''.''λy''.(y x). This is typical. In general, the ''T''[ ] construction may expand a lambda

| |

| term of length ''n'' to a combinatorial term of length

| |

| [[Big O notation|Θ]](3<sup>''n''</sup>) {{Citation needed|date=May 2010}}.

| |

| | |

| ==== Explanation of the ''T''[ ] transformation ====

| |

| | |

| The ''T''[ ] transformation is motivated by a desire to eliminate

| |

| abstraction. Two special cases, rules 3 and 4, are trivial: ''λx''.''x'' is

| |

| clearly equivalent to '''I''', and ''λx''.''E'' is clearly equivalent to

| |

| ('''K''' ''T''[''E'']) if ''x'' does not appear free in ''E''.

| |

| | |

| The first two rules are also simple: Variables convert to themselves,

| |

| and applications, which are allowed in combinatory terms, are

| |

| converted to combinators simply by converting the applicand and the

| |

| argument to combinators.

| |

| | |

| It's rules 5 and 6 that are of interest. Rule 5 simply says that to

| |

| convert a complex abstraction to a combinator, we must first convert

| |

| its body to a combinator, and then eliminate the abstraction. Rule 6

| |

| actually eliminates the abstraction.

| |

| | |

| ''λx''.(''E₁'' ''E₂'') is a function which takes an argument, say ''a'', and

| |

| substitutes it into the lambda term (''E₁'' ''E₂'') in place of ''x'',

| |

| yielding (''E₁'' ''E₂'')[''x'' : = ''a'']. But substituting ''a'' into (''E₁'' ''E₂'') in place

| |

| of ''x'' is just the same as substituting it into both ''E₁'' and ''E₂'', so

| |

| | |

| (''E₁'' ''E₂'')[''x'' := ''a''] = (''E₁''[''x'' := ''a''] ''E₂''[''x'' := ''a''])

| |

| | |

| (''λx''.(''E₁'' ''E₂'') ''a'') = ((''λx''.''E₁'' ''a'') (''λx''.''E₂'' ''a''))

| |

| = ('''S''' ''λx''.''E₁'' ''λx''.''E₂'' ''a'')

| |

| = (('''S''' ''λx''.''E₁'' ''λx''.''E₂'') ''a'')

| |

| | |

| By extensional equality,

| |

| | |

| ''λx''.(''E₁'' ''E2'') = ('''S''' ''λx''.''E₁'' ''λx''.''E₂'')

| |

| | |

| Therefore, to find a combinator equivalent to ''λx''.(''E₁'' ''E₂''), it is

| |

| sufficient to find a combinator equivalent to ('''S''' ''λx''.''E₁'' ''λx''.''E₂''), and

| |

| | |

| ('''S''' ''T''[''λx''.''E₁''] ''T''[''λx''.''E₂''])

| |

| | |

| evidently fits the bill. ''E₁'' and ''E₂'' each contain strictly fewer

| |

| applications than (''E₁'' ''E₂''), so the recursion must terminate in a lambda

| |

| term with no applications at all—either a variable, or a term of the

| |

| form ''λx''.''E''.

| |

| | |

| === Simplifications of the transformation ===

| |

| ==== η-reduction ====

| |

| | |

| The combinators generated by the ''T''[ ] transformation can be made

| |

| smaller if we take into account the ''η-reduction'' rule:

| |

| | |

| ''T''[''λx''.(''E'' ''x'')] = ''T''[''E''] (if ''x'' is not free in ''E'')

| |

| | |

| ''λx''.(''E'' x) is the function which takes an argument, ''x'', and

| |

| applies the function ''E'' to it; this is extensionally equal to the

| |

| function ''E'' itself. It is therefore sufficient to convert ''E'' to

| |

| combinatorial form.

| |

| | |

| Taking this simplification into account, the example above becomes:

| |

| | |

| ''T''[''λx''.''λy''.(''y'' ''x'')]

| |

| = ...

| |

| = ('''S''' ('''K''' ('''S''' '''I''')) ''T''[''λx''.('''K''' ''x'')])

| |

| = ('''S''' ('''K''' ('''S''' '''I''')) '''K''') (by η-reduction)

| |

| | |

| This combinator is equivalent to the earlier, longer one:

| |

| | |

| ('''S''' ('''K''' ('''S''' '''I''')) '''K''' ''x'' ''y'')

| |

| = ('''K''' ('''S''' '''I''') ''x'' ('''K''' ''x'') ''y'')

| |

| = ('''S''' '''I''' ('''K''' ''x'') ''y'')

| |

| = ('''I''' ''y'' ('''K''' ''x'' ''y''))

| |

| = (''y'' ('''K''' ''x'' ''y''))

| |

| = (''y'' ''x'')

| |

| | |

| Similarly, the original version of the ''T''[ ] transformation

| |

| transformed the identity function ''λf''.''λx''.(''f'' ''x'') into ('''S''' ('''S''' ('''K''' '''S''') ('''S''' ('''K''' '''K''') '''I''')) ('''K''' '''I''')). With the η-reduction rule, ''λf''.''λx''.(''f'' ''x'') is

| |

| transformed into '''I'''.

| |

| | |

| ==== One-point basis ====

| |

| | |

| There are one-point bases from which every combinator can be composed extensionally equal to ''any'' lambda term. The simplest example of such a basis is {'''X'''} where:

| |

| | |

| '''X''' ≡ ''λx''.((x'''S''')'''K''')

| |

| | |

| It is not difficult to verify that:

| |

| '''X''' ('''X''' ('''X''' '''X''')) =<sup>ηβ</sup> '''K''' and

| |

| '''X''' ('''X''' ('''X''' ('''X''' '''X'''))) =<sup>ηβ</sup> '''S'''.

| |

| | |

| Since {'''K''', '''S'''} is a basis, it follows that {'''X'''} is a basis too. The [[Iota and Jot|Iota]] programming language uses '''X''' as its sole combinator.

| |

| | |

| Another simple example of a one-point basis is:

| |

| | |

| '''X'''' ≡ ''λx''.(x '''K''' '''S''' '''K''') with

| |

| ('''X'''' '''X'''') '''X'''' =<sup>β</sup> '''K''' and

| |

| '''X'''' ('''X'''' '''X'''') =<sup>β</sup> '''S'''

| |

| | |

| '''X' ''' does not need η contraction in order to produce '''K''' and '''S'''.

| |

| | |

| ==== Combinators B, C ====

| |

| | |

| In addition to '''S''' and '''K''', [[Moses Schönfinkel|Schönfinkel]]'s paper included two combinators which are now called '''B''' and '''C''', with the following reductions:

| |

| | |

| ('''C''' ''f'' ''x'' ''y'') = (''f'' ''y'' ''x'')

| |

| ('''B''' ''f'' ''g'' ''x'') = (''f'' (''g'' ''x''))

| |

| | |

| He also explains how they in turn can be expressed using only '''S''' and '''K'''.

| |

| | |

| These combinators are extremely useful when translating predicate logic or lambda calculus into combinator expressions. They were also used by [[Haskell Curry|Curry]], and much later by [[David Turner (computer scientist)|David Turner]], whose name has been associated with their computational use. Using them, we can extend the rules for the transformation as follows:

| |

| | |

| # ''T''[''x''] => ''x''

| |

| # ''T''[(''E₁'' ''E₂'')] => (''T''[''E₁''] ''T''[''E₂''])

| |

| # ''T''[''λx''.''E''] => ('''K''' ''T''[''E'']) (if ''x'' is not free in ''E'')

| |

| # ''T''[''λx''.''x''] => '''I'''

| |

| # ''T''[''λx''.''λy''.''E''] => ''T''<nowiki>[</nowiki>''λx''.''T''<nowiki>[</nowiki>''λy''.''E''<nowiki>]]</nowiki> (if ''x'' is free in ''E'')

| |

| # ''T''[''λx''.(''E₁'' ''E₂'')] => ('''S''' ''T''[''λx''.''E₁''] ''T''[''λx''.''E₂'']) (if ''x'' is free in both ''E₁'' and ''E₂'')

| |

| # ''T''[''λx''.(''E₁'' ''E₂'')] => ('''C''' ''T''[''λx''.''E₁''] ''T''[''E₂'']) (if ''x'' is free in ''E₁'' but not ''E₂'')

| |

| # ''T''[''λx''.(''E₁'' ''E₂'')] => ('''B''' ''T''[''E₁''] ''T''[''λx''.''E₂'']) (if ''x'' is free in ''E₂'' but not ''E₁'')

| |

| | |

| Using '''B''' and '''C''' combinators, the transformation of

| |

| ''λx''.''λy''.(''y'' ''x'') looks like this:

| |

| | |

| ''T''[''λx''.''λy''.(''y'' ''x'')]

| |

| = ''T''<nowiki>[</nowiki>''λx''.''T''<nowiki>[</nowiki>''λy''.(''y'' ''x'')<nowiki>]</nowiki><nowiki>]</nowiki>

| |

| = ''T''[''λx''.('''C''' ''T''[''λy''.''y''] ''x'')] (by rule 7)

| |

| = ''T''[''λx''.('''C''' '''I''' ''x'')]

| |

| = ('''C''' '''I''') (η-reduction)

| |

| = '''C'''<sub>*</sub>(traditional canonical notation : '''X'''<sub>*</sub> = '''X''' '''I''')

| |

| = '''I''''(traditional canonical notation: '''X'''' = '''C''' '''X''')

| |

| | |

| And indeed, ('''C''' '''I''' ''x'' ''y'') does reduce to (''y'' ''x''):

| |

| | |

| ('''C''' '''I''' ''x'' ''y'')

| |

| = ('''I''' ''y'' ''x'')

| |

| = (''y'' ''x'')

| |

| | |

| The motivation here is that '''B''' and '''C''' are limited versions of '''S'''.

| |

| Whereas '''S''' takes a value and substitutes it into both the applicand and

| |

| its argument before performing the application, '''C''' performs the

| |

| substitution only in the applicand, and '''B''' only in the argument.

| |

| | |

| The modern names for the combinators come from [[Haskell Curry]]'s doctoral thesis of 1930 (see [[B,C,K,W System]]). In [[Moses Schönfinkel|Schönfinkel]]'s original paper, what we now call '''S''', '''K''', '''I''', '''B''' and '''C''' were called '''S''', '''C''', '''I''', '''Z''', and '''T''' respectively.

| |

| | |

| The reduction in combinator size that results from the new transformation rules | |

| can also be achieved without introducing '''B''' and '''C''', as demonstrated in Section 3.2 of

| |

| <ref>John Tromp, Binary Lambda Calculus and Combinatory Logic, in ''Randomness And Complexity, from Leibniz To Chaitin'', ed. Cristian S. Calude, World Scientific Publishing Company, October 2008. [http://www.cwi.nl/~tromp/cl/LC.pdf (pdf version)]</ref>.

| |

| | |

| ===== CL<sub>K</sub> versus CL<sub>I</sub> calculus =====

| |

| A distinction must be made between the '''CL'''<sub>K</sub> as described in this article and the '''CL'''<sub>I</sub> calculus. The distinction corresponds to that between the λ<sub>K</sub> and the λ<sub>I</sub> calculus. Unlike the λ<sub>K</sub> calculus, the λ<sub>I</sub> calculus restricts abstractions to:

| |

| ::''λx''.''E'' where ''x'' has at least one free occurrence in ''E''.

| |

| As a consequence, combinator '''K''' is not present in the λ<sub>I</sub> calculus nor in the '''CL'''<sub>I</sub> calculus. The constants of '''CL'''<sub>I</sub> are: '''I''', '''B''', '''C''' and '''S''', which form a basis from which all '''CL'''<sub>I</sub> terms can be composed (modulo equality). Every λ<sub>I</sub> term can be converted into an equal '''CL'''<sub>I</sub> combinator according to rules similar to those presented above for the conversion of λ<sub>K</sub> terms into '''CL'''<sub>K</sub> combinators. See chapter 9 in Barendregt (1984).

| |

| | |

| === Reverse conversion ===

| |

| | |

| The conversion ''L''[ ] from combinatorial terms to lambda terms is

| |

| trivial:

| |

| | |

| ''L''['''I'''] = ''λx''.''x''

| |

| ''L''['''K'''] = ''λx''.''λy''.''x''

| |

| ''L''['''C'''] = ''λx''.''λy''.''λz''.(''x'' ''z'' ''y'')

| |

| ''L''['''B'''] = ''λx''.''λy''.''λz''.(''x'' (''y'' ''z''))

| |

| ''L''['''S'''] = ''λx''.''λy''.''λz''.(''x'' ''z'' (''y'' ''z''))

| |

| ''L''[(''E₁'' ''E₂'')] = (''L''[''E₁''] ''L''[''E₂''])

| |

| | |

| Note, however, that this transformation is not the inverse

| |

| transformation of any of the versions of ''T''[ ] that we have seen.

| |

| | |

| == Undecidability of combinatorial calculus ==

| |

| | |

| A [[normal form (abstract rewriting)|normal form]] is any combinatory term in which the primitive combinators that occur, if any, are not applied to enough arguments to be simplified. It is undecidable whether a general combinatory term has a normal form; whether two combinatory terms are equivalent, etc. This is equivalent to the undecidability of the corresponding problems for lambda terms. However, a direct proof is as follows:

| |

| | |

| First, observe that the term

| |

| | |

| '''Ω''' = ('''S''' '''I''' '''I''' ('''S''' '''I''' '''I'''))

| |

| | |

| has no normal form, because it reduces to itself after three steps, as

| |

| follows:

| |

| | |

| ('''S''' '''I''' '''I''' ('''S''' '''I''' '''I'''))

| |

| = ('''I''' ('''S''' '''I''' '''I''') ('''I''' ('''S''' '''I''' '''I''')))

| |

| = ('''S''' '''I''' '''I''' ('''I''' ('''S''' '''I''' '''I''')))

| |

| = ('''S''' '''I''' '''I''' ('''S''' '''I''' '''I'''))

| |

| | |

| and clearly no other reduction order can make the expression shorter.

| |

| | |

| Now, suppose '''N''' were a combinator for detecting normal forms,

| |

| such that

| |

| | |

| ('''N''' ''x'') => '''T''', if ''x'' has a normal form

| |

| '''F''', otherwise.

| |

| | |

| (Where '''T''' and '''F''' represent the conventional [[Church encoding]]s of true and false, ''λx''.''λy''.''x'' and ''λx''.''λy''.''y'', transformed into combinatory logic. The combinatory versions have '''T''' = '''K''' and '''F''' = ('''K''' '''I''').)

| |

| | |

| Now let

| |

| | |

| ''Z'' = ('''C''' ('''C''' ('''B''' '''N''' ('''S''' '''I''' '''I''')) '''Ω''') '''I''')

| |

| | |

| now consider the term ('''S''' '''I''' '''I''' ''Z''). Does ('''S''' '''I''' '''I''' ''Z'') have a normal

| |

| form? It does if and only if the following do also:

| |

| | |

| ('''S''' '''I''' '''I''' ''Z'')

| |

| = ('''I''' ''Z'' ('''I''' ''Z''))

| |

| = (''Z'' ('''I''' ''Z''))

| |

| = (''Z'' ''Z'')

| |

| = ('''C''' ('''C''' ('''B''' '''N''' ('''S''' '''I''' '''I''')) '''Ω''') '''I''' ''Z'') (definition of ''Z'')

| |

| = ('''C''' ('''B''' '''N''' ('''S''' '''I''' '''I''')) '''Ω''' ''Z'' '''I''')

| |

| = ('''B''' '''N''' ('''S''' '''I''' '''I''') ''Z'' '''Ω''' '''I''')

| |

| = ('''N''' ('''S''' '''I''' '''I''' ''Z'') '''Ω''' '''I''')

| |

| | |

| Now we need to apply '''N''' to ('''S''' '''I''' '''I''' ''Z'').

| |

| Either ('''S''' '''I''' '''I''' ''Z'') has a normal form, or it does not. If it ''does''

| |

| have a normal form, then the foregoing reduces as follows:

| |

| | |

| ('''N''' ('''S''' '''I''' '''I''' ''Z'') '''Ω''' '''I''')

| |

| = ('''K''' '''Ω''' '''I''') (definition of '''N''')

| |

| = '''Ω'''

| |

| | |

| but '''Ω''' does ''not'' have a normal form, so we have a contradiction. But

| |

| if ('''S''' '''I''' '''I''' ''Z'') does ''not'' have a normal form, the foregoing reduces as

| |

| follows:

| |

| | |

| ('''N''' ('''S''' '''I''' '''I''' ''Z'') '''Ω''' '''I''')

| |

| = ('''K''' '''I''' '''Ω''' '''I''') (definition of '''N''')

| |

| = ('''I''' '''I''')

| |

| '''I'''

| |

| | |

| which means that the normal form of ('''S''' '''I''' '''I''' ''Z'') is simply '''I''', another

| |

| contradiction. Therefore, the hypothetical normal-form combinator '''N'''

| |

| cannot exist.

| |

| | |

| The combinatory logic analogue of [[Rice's theorem]] says that there is no complete nontrivial predicate. A ''predicate'' is a combinator that, when applied, returns either '''T''' or '''F'''. A predicate ''N'' is ''nontrivial'' if there are two arguments ''A'' and ''B'' such that ''NA''='''T''' and ''NB''='''F'''. A combinator ''N'' is ''complete'' if and only if ''NM'' has a normal form for every argument ''M''. The analogue of Rice's theorem then says that every complete predicate is trivial. The proof of this theorem is rather simple.

| |

| | |

| '''Proof:''' By reductio ad absurdum. Suppose there is a complete non trivial predicate, say ''N''.<br />

| |

| Because ''N'' is supposed to be non trivial there are combinators ''A'' and ''B'' such that<br />

| |

| (''N A'') = '''T''' and<br />

| |

| (''N B'') = '''F'''.

| |

| | |

| Define NEGATION ≡ ''λx.''(if (''N x'') then ''B'' else ''A'') ≡ ''λx.''((''N x'') ''B'' ''A'')<br />

| |

| Define ABSURDUM ≡ ('''Y''' NEGATION)

| |

| | |

| Fixed point theorem gives: ABSURDUM = (NEGATION ABSURDUM), for<br />

| |

| ABSURDUM ≡ ('''Y''' NEGATION) = (NEGATION ('''Y''' NEGATION)) ≡ (NEGATION ABSURDUM).

| |

| | |

| Because ''N'' is supposed to be complete either:

| |

| # (''N'' ABSURDUM) = '''F''' or

| |

| # (''N'' ABSURDUM) = '''T'''

| |

| | |

| Case 1: '''F''' = (''N'' ABSURDUM) = ''N'' (NEGATION ABSURDUM) = (''N A'') = '''T''', a contradiction.<br />

| |

| Case 2: '''T''' = (''N'' ABSURDUM) = ''N'' (NEGATION ABSURDUM) = (''N B'') = '''F''', again a contradiction.

| |

| | |

| Hence (''N'' ABSURDUM) is neither '''T''' nor '''F''', which contradicts the presupposition that ''N'' would be a complete non trivial predicate. '''QED'''.

| |

| | |

| From this undecidability theorem it immediately follows that there is no complete predicate that can discriminate between terms that have a normal form and terms that do not have a normal form. It also follows that there is '''no''' complete predicate, say EQUAL, such that:<br />

| |

| (EQUAL ''A B'') = '''T''' if ''A'' = ''B'' and<br />

| |

| (EQUAL ''A B'') = '''F''' if ''A'' ≠ ''B''.<br />

| |

| If EQUAL would exist, then for all ''A'', ''λx.''(EQUAL ''x A'') would have to be a complete non trivial predicate.

| |

| | |

| == Applications ==

| |

| === Compilation of functional languages ===

| |

| | |

| [[Functional programming language]]s are often based on the simple but

| |

| universal semantics of the [[lambda calculus]].

| |

| | |

| David Turner used his combinators to implement the [[SASL programming language]].

| |

| | |

| [[Kenneth E. Iverson]] used primitives based on Curry's combinators in his [[J programming language]], a successor to [[APL (programming language)|APL]]. This enabled what Iverson called [[tacit programming]], that is, programming in functional expressions containing no variables, along with powerful tools for working with such programs. It turns out that tacit programming is possible in a clumsier manner in any APL-like language with user-defined operators ([http://portal.acm.org/citation.cfm?id=114065&dl=GUIDE&coll=GUIDE Pure Functions in APL and J]).

| |

| <!--

| |

| (Discuss strict vs. [[lazy evaluation]] semantics. Note implications of

| |

| graph reduction implementation for lazy evaluation. Point out

| |

| efficiency problem in lazy semantics: Repeated evaluation of the same

| |

| expression, in, e.g. (square COMPLICATED) => (* COMPLICATED

| |

| COMPLICATED), normally avoided by eager evaluation and call-by-value.

| |

| Discuss benefit of graph reduction in this case: when (square

| |

| COMPLICATED) is evaluated, the representation of COMPLICATED can be

| |

| shared by both branches of the resulting graph for (* COMPLICATED

| |

| COMPLICATED), and evaluated only once.)

| |

| -->

| |

| <!-- Work in [[combinator library]] somehow. -->

| |

| | |

| === Logic ===

| |

| The [[Curry–Howard isomorphism]] implies a connection between logic and programming: every proof of a theorem of [[intuitionistic logic]] corresponds to a reduction of a typed lambda term, and conversely. Moreover, theorems can be identified with function type signatures. Specifically, a typed combinatory logic corresponds to a [[Hilbert-style deduction system|Hilbert system]] in [[proof theory]].

| |

| | |

| The '''K''' and '''S''' combinators correspond to the axioms

| |

| :'''AK''': ''A'' → (''B'' → ''A''),

| |

| :'''AS''': (''A'' → (''B'' → ''C'')) → ((''A'' → ''B'') → (''A'' → ''C'')),

| |

| and function application corresponds to the detachment (modus ponens) rule

| |

| :'''MP''': from ''A'' and ''A'' → ''B'' infer ''B''.

| |

| The calculus consisting of '''AK''', '''AS''', and '''MP''' is complete for the implicational fragment of the intuitionistic logic, which can be seen as follows. Consider the set ''W'' of all deductively closed sets of formulas, ordered by [[inclusion (set theory)|inclusion]]. Then <math>\langle W,\subseteq\rangle</math> is an intuitionistic [[Kripke semantics|Kripke frame]], and we define a model <math>\Vdash</math> in this frame by

| |

| :<math>X\Vdash A\iff A\in X.</math>

| |

| This definition obeys the conditions on satisfaction of →: on one hand, if <math>X\Vdash A\to B</math>, and <math>Y\in W</math> is such that <math>Y\supseteq X</math> and <math>Y\Vdash A</math>, then <math>Y\Vdash B</math> by modus ponens. On the other hand, if <math>X\not\Vdash A\to B</math>, then <math>X,A\not\vdash B</math> by the [[deduction theorem]], thus the deductive closure of <math>X\cup\{A\}</math> is an element <math>Y\in W</math> such that <math>Y\supseteq X</math>, <math>Y\Vdash A</math>, and <math>Y\not\Vdash B</math>.

| |

| | |

| Let ''A'' be any formula which is not provable in the calculus. Then ''A'' does not belong to the deductive closure ''X'' of the empty set, thus <math>X\not\Vdash A</math>, and ''A'' is not intuitionistically valid.

| |

|

| |

|

| ==See also== | | ==See also== |

| * [[SKI combinator calculus]] | | * [[Numerical analysis]] |

| * [[B,C,K,W system]] | | * [[CORDIC]] |

| * [[Fixed point combinator]] | | * [[Exact trigonometric constants]] |

| * [[graph reduction machine]] | | * [[Aryabhata's sine table]] |

| * [[supercombinator]]s

| | * [[Madhava's sine table]] |

| * [[Lambda calculus]] and [[Cylindric algebra]], other approaches to modelling quantification and eliminating variables

| |

| * ''[[To Mock a Mockingbird]]''

| |

| * [[combinatory categorial grammar]]

| |

| * [[Categorical abstract machine]]

| |

| * [[Applicative computing systems]]

| |

| | |

| == References ==

| |

| {{reflist}}

| |

| | |

| ==Further reading==

| |

| *[[Hendrik Pieter Barendregt]], 1984. ''The Lambda Calculus, Its Syntax and Semantics''. Studies in Logic and the Foundations of Mathematics, Volume 103, North-Holland. ISBN 0-444-87508-5 | |

| *{{cite book

| |

| | last1 = Curry | first1 = Haskell B.

| |

| | authorlink1 = Haskell Curry

| |

| | last2 = Feys | first2 = Robert

| |

| | authorlink2 = Robert Feys

| |

| | title = Combinatory Logic

| |

| | volume = Vol. I

| |

| | year = 1958

| |

| | publisher = North Holland

| |

| | location = Amsterdam

| |

| | isbn = 0-7204-2208-6

| |

| }}

| |

| *{{cite book

| |

| | last1 = Curry | first1 = Haskell B.

| |

| | first2 = J. Roger | last2 = Hindley

| |

| | first3 = Jonathan P. | last3 = Seldin

| |

| | authorlink1 = Haskell Curry

| |

| | authorlink2 = J. Roger Hindley

| |

| | authorlink3 = Jonathan P. Seldin

| |

| | title = Combinatory Logic

| |

| | volume = Vol. II

| |

| | year = 1972

| |

| | publisher = North Holland

| |

| | location = Amsterdam

| |

| | isbn = 0-7204-2208-6

| |

| }}

| |

| * Field, Anthony J. and Peter G. Harrison, 1998. ''Functional Programming''. . Addison-Wesley. ISBN 0-201-19249-7

| |

| *{{cite

| |

| | last1 = Hindley | first1 = J. Roger

| |

| | last2 = Meredith | first2 = David

| |

| | authorlink1 = J. Roger Hindley

| |

| | authorlink2 = David Meredith

| |

| | title = Principal type-schemes and condensed detachment

| |

| | url = http://projecteuclid.org/euclid.jsl/1183743187

| |

| | id = {{MR|1043546}}

| |

| | journal = [[Journal of Symbolic Logic]]

| |

| | volume = 55

| |

| | issue = 1

| |

| | pages = 90–105

| |

| | year = 1990

| |

| }}

| |

| * Hindley, J. R., and Seldin, J. P. (2008) ''[http://www.cambridge.org/catalogue/catalogue.asp?isbn=9780521898850 λ-calculus and Combinators: An Introduction]''. Cambridge Univ. Press.

| |

| * Paulson, Lawrence C., 1995. ''[http://www.cl.cam.ac.uk/Teaching/Lectures/founds-fp/Founds-FP.ps.gz Foundations of Functional Programming.]'' University of Cambridge.

| |

| *<span id="Quine 1960">[[Willard Van Orman Quine|Quine, W. V.]], 1960 "Variables explained away", ''Proceedings of the American Philosophical Society'' '''104''':3:343–347 (June 15, 1960) [http://links.jstor.org/sici?sici=0003-049X%2819600615%29104%3A3%3C343%3AVEA%3E2.0.CO%3B2-W at JSTOR]. Reprinted as Chapter 23 of Quine's ''Selected Logic Papers'' (1966), pp. 227–235</span>

| |

| * [[Moses Schönfinkel]], 1924, "Über die Bausteine der mathematischen Logik," translated as "On the Building Blocks of Mathematical Logic" in ''From Frege to Gödel: a source book in mathematical logic, 1879–1931'', [[Jean van Heijenoort]], ed. Harvard University Press, 1967. ISBN 0-674-32449-8. The article that founded combinatory logic.

| |

| *Sørensen, Morten Heine B. and Paweł Urzyczyn, 1999. ''[http://folli.loria.fr/cds/1999/library/pdf/curry-howard.pdf Lectures on the Curry–Howard Isomorphism.]'' University of Copenhagen and University of Warsaw, 1999.

| |

| * [[Raymond Smullyan|Smullyan, Raymond]], 1985. ''[[To Mock a Mockingbird]]''. Knopf. ISBN 0-394-53491-3. A gentle introduction to combinatory logic, presented as a series of recreational puzzles using bird watching metaphors.

| |

| *--------, 1994. ''Diagonalization and Self-Reference''. Oxford Univ. Press. Chpts. 17-20 are a more formal introduction to combinatory logic, with a special emphasis on fixed point results.

| |

| * Wolfengagen, V.E. ''[http://vew.0catch.com/books/Wolfengagen_CLP-2003-En.djvu Combinatory logic in programming.] Computations with objects through examples and exercises''. -- 2-nd ed. -- M.: "Center JurInfoR" Ltd., 2003. -- x+337 с. ISBN 5-89158-101-9.

| |

|

| |

|

| ==External links== | | ==References== |

| *[[Stanford Encyclopedia of Philosophy]]: "[http://plato.stanford.edu/entries/logic-combinatory/ Combinatory Logic]" by Katalin Bimbó. | | * Carl B. Boyer, ''A History of Mathematics'', 2nd ed. (Wiley, New York, 1991). |

| *[http://www.sadl.uleth.ca/gsdl/cgi-bin/library?a=p&p=about&c=curry 1920–1931 Curry's block notes.] | | * Manfred Tasche and Hansmartin Zeuner, "Improved roundoff error analysis for precomputed twiddle factors," ''J. Computational Analysis and Applications'' '''4''' (1), 1–18 (2002). |

| *Keenan, David C. (2001) "[http://dkeenan.com/Lambda/index.htm To Dissect a Mockingbird: A Graphical Notation for the Lambda Calculus with Animated Reduction.]" | | * James C. Schatzman, "Accuracy of the discrete Fourier transform and the fast Fourier transform," ''SIAM J. Sci. Comput.'' '''17''' (5), 1150–1166 (1996). |

| *Rathman, Chris, "[http://www.angelfire.com/tx4/cus/combinator/birds.html Combinator Birds.]" A table distilling much of the essence of Smullyan (1985).

| | * Vitit Kantabutra, "On hardware for computing exponential and trigonometric functions," ''IEEE Trans. Computers'' '''45''' (3), 328–339 (1996). |

| *[http://cstein.kings.cam.ac.uk/~chris/combinators.html Drag 'n' Drop Combinators.] (Java Applet) | | * R. P. Brent, "[http://doi.acm.org/10.1145/321941.321944 Fast Multiple-Precision Evaluation of Elementary Functions]", ''J. ACM'' '''23''', 242–251 (1976). |

| *[http://www.cwi.nl/~tromp/cl/LC.pdf Binary Lambda Calculus and Combinatory Logic.] | | * Singleton, Richard C. (1967). On computing the fast Fourier transform. ''Comm. ACM'', vol. 10, 647–654. |

| *[http://code.google.com/p/clache Combinatory logic reduction web server]

| | * Gal, Shmuel and Bachelis, Boris. An accurate elementary mathematical library for the IEEE floating point standard, ACM Transaction on Mathematical Software (1991). |

|

| |

|

| [[Category:Lambda calculus]] | | [[Category:Numerical analysis]] |

| [[Category:Logic in computer science]] | | [[Category:Trigonometry]] |

| [[Category:Combinatory logic|*]]

| |

|

| |

|

| [[ca:Lògica combinatòria]] | | [[ar:جداول مثلثية]] |

| [[de:Kombinatorische Logik]] | | [[ca:Construcció de les taules trigonomètriques]] |

| [[es:Lógica combinatoria]]

| | [[fr:Construction des tables trigonométriques]] |

| [[fr:Logique combinatoire]] | | [[it:Tavola trigonometrica]] |

| [[hr:Kombinatorna logika]]

| | [[sv:Trigonometrisk tabell]] |

| [[nl:Combinatorische logica]]

| |

| [[ja:コンビネータ論理]]

| |

| [[pl:Rachunek kombinatorów]]

| |

| [[pt:Lógica combinatória]]

| |

| [[ru:Комбинаторная логика]]

| |

| [[sh:Kombinatorna logika]] | |

| [[zh:组合子逻辑]] | |