Recursive Bayesian estimation: Difference between revisions

en>Nehalem fix broken link |

{{more footnotes|date=January 2010}}

{{Regression bar}} | |||

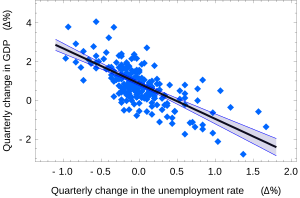

[[Image:Okuns law quarterly differences.svg|300px|thumb|[[Okun's law]] in [[macroeconomics]] is an example of the simple linear regression. Here the dependent variable (GDP growth) is presumed to be in a linear relationship with the changes in the unemployment rate.]] | |||

In [[statistics]], '''simple linear regression''' is the [[ordinary least squares|least squares]] estimator of a [[linear regression]] model with a single [[covariate|explanatory variable]]. In other words, simple linear regression fits a straight line through the set of ''n'' points in such a way that the sum of squared ''[[errors and residuals in statistics|residuals]]'' of the model (that is, the vertical distances between the points of the data set and the fitted line) is as small as possible.

The adjective ''simple'' refers to the fact that the model has a single explanatory variable. The slope of the fitted line is equal to the [[Pearson product moment correlation coefficient|correlation]] between ''y'' and ''x'' corrected by the ratio of standard deviations of these variables. The intercept of the fitted line is such that the line passes through the center of mass (<span style="text-decoration:overline">''x''</span>, <span style="text-decoration:overline">''y''</span>) of the data points.

Other regression methods besides the simple [[ordinary least squares]] (OLS) also exist (see [[linear regression model]]). In particular, when fitting a line by eye, people tend to draw a slightly steeper line, closer to the one produced by the [[Deming regression|total least squares]] method. This occurs because it is more natural to consider the orthogonal distances from the observations to the regression line, rather than the vertical ones as the OLS method does.

==Fitting the regression line==

Suppose there are ''n'' data points {''y''<sub>''i''</sub>, ''x''<sub>''i''</sub>}, where ''i'' = 1, 2, …, ''n''. The goal is to find the equation of the straight line

: <math> y = \alpha + \beta x, \,</math>

which would provide a <!-- maybe indefinite article here will aggravate some of the grammar purists, but it attempts to convey the idea that there could be many different ways to define "best" fit --> "best" fit for the data points. Here "best" is understood in the [[Ordinary least squares|least-squares]] sense: the line that minimizes the sum of squared residuals of the linear regression model. In other words, the numbers ''α'' (the ''y''-intercept) and ''β'' (the slope) solve the following minimization problem:

: <math>\text{Find }\min_{\alpha,\,\beta}Q(\alpha,\beta),\text{ where } Q(\alpha,\beta) = \sum_{i=1}^n\hat{\varepsilon}_i^{\,2} = \sum_{i=1}^n (y_i - \alpha - \beta x_i)^2\ </math>

By using [[calculus]], the geometry of [[inner product space]]s, or simply expanding to get a quadratic in ''α'' and ''β'', it can be shown that the values of ''α'' and ''β'' that minimize the objective function ''Q''<ref>Kenney, J. F. and Keeping, E. S. (1962) "Linear Regression and Correlation." Ch. 15 in ''Mathematics of Statistics'', Pt. 1, 3rd ed. Princeton, NJ: Van Nostrand, pp. 252-285</ref> are

: <math>\begin{align}
\hat\beta & = \frac{ \sum_{i=1}^{n} (x_{i}-\bar{x})(y_{i}-\bar{y}) }{ \sum_{i=1}^{n} (x_{i}-\bar{x})^2 }
= \frac{ \sum_{i=1}^{n}{x_{i}y_{i}} - \frac1n \sum_{i=1}^{n}{x_{i}}\sum_{j=1}^{n}{y_{j}}}{ \sum_{i=1}^{n}({x_{i}^2}) - \frac1n (\sum_{i=1}^{n}{x_{i}})^2 } \\[6pt]
& = \frac{ \overline{xy} - \bar{x}\bar{y} }{ \overline{x^2} - \bar{x}^2 }
= \frac{ \operatorname{Cov}[x,y] }{ \operatorname{Var}[x] }
= r_{xy} \frac{s_y}{s_x}, \\[6pt]
\hat\alpha & = \bar{y} - \hat\beta\,\bar{x},
\end{align}</math>

where <math>r_{xy}</math> is the [[Correlation#Sample_correlation|sample correlation coefficient]] between <math>x</math> and <math>y</math>, <math>s_{x}</math> is the [[standard deviation]] of <math>x</math>, and <math>s_{y}</math> is correspondingly the standard deviation of <math>y</math>. A horizontal bar over a quantity indicates the sample average of that quantity. For example: <math>\overline{xy} = \tfrac{1}{n}\textstyle\sum_{i=1}^n x_iy_i\ .</math>

Substituting the above expressions for <math>\hat\alpha</math> and <math>\hat\beta</math> into

: <math> y = \hat\alpha + \hat\beta x, \,</math>

yields

: <math>\frac{ y-\bar{y}}{s_y} = r_{xy} \frac{ x-\bar{x}}{s_x} </math>

This shows the role <math>r_{xy}</math> plays in the regression line of standardized data points. It is sometimes useful to calculate <math>r_{xy}</math> from the data independently using this equation:

: <math>r_{xy} = \frac{ \overline{xy} - \bar{x}\bar{y} }{ \sqrt{ (\overline{x^2} - \bar{x}^2) (\overline{y^2} - \bar{y}^2 )} } </math>

The [[Coefficient of determination|coefficient of determination (R squared)]] is equal to <math>r_{xy}^2</math> when the model is linear with a single independent variable. See [[Correlation#Sample_correlation|sample correlation coefficient]] for additional details.
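The moment formulas above translate directly into a few lines of code. The following is a minimal Python sketch, not a reference implementation: the function name <code>fit_simple_ols</code> and the toy data are assumptions made for illustration and do not come from the cited source.

```python
from math import sqrt

def fit_simple_ols(x, y):
    """Return (alpha_hat, beta_hat, r_xy) for the line y = alpha + beta * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    mean_xy = sum(a * b for a, b in zip(x, y)) / n
    mean_xx = sum(a * a for a in x) / n
    mean_yy = sum(b * b for b in y) / n
    cov_xy = mean_xy - mean_x * mean_y   # Cov[x, y]
    var_x = mean_xx - mean_x ** 2        # Var[x]
    var_y = mean_yy - mean_y ** 2        # Var[y]
    if var_x == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    beta_hat = cov_xy / var_x
    alpha_hat = mean_y - beta_hat * mean_x
    # The correlation is undefined when y is constant.
    r_xy = cov_xy / sqrt(var_x * var_y) if var_y > 0 else float("nan")
    return alpha_hat, beta_hat, r_xy

# Toy data lying exactly on y = 1 + 2x (illustrative only).
alpha_hat, beta_hat, r_xy = fit_simple_ols([0.0, 1.0, 2.0, 3.0],
                                           [1.0, 3.0, 5.0, 7.0])
print(alpha_hat, beta_hat, r_xy)
```

Because the toy points lie on a straight line, the fitted slope and intercept reproduce that line and the correlation is 1. For data with a large mean relative to its spread, the centered sums in the first form of the slope formula are numerically safer than the moment form used here.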

===Linear regression without the intercept term===

Sometimes a simple linear regression model without the intercept term, <math>y = \beta x</math>, is considered. In such a case, the OLS estimator for <math>\beta</math> simplifies to

: <math>\hat\beta = (\overline{x y}) / (\overline{x^2})</math>

and the sample correlation coefficient becomes

: <math>r_{xy} = \frac{ \overline{xy} }{ \sqrt{ (\overline{x^2}) (\overline{y^2}) } } </math>
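The no-intercept case can be sketched in the same way. In the Python snippet below the function name <code>fit_through_origin</code> and the sample values are hypothetical; the factor 1/''n'' cancels in both ratios, so plain sums are used.

```python
from math import sqrt

def fit_through_origin(x, y):
    """Return (beta_hat, r_xy) for the model y = beta * x (no intercept)."""
    sum_xy = sum(a * b for a, b in zip(x, y))
    sum_xx = sum(a * a for a in x)
    sum_yy = sum(b * b for b in y)
    if sum_xx == 0 or sum_yy == 0:
        raise ValueError("x and y must each contain a non-zero value")
    beta_hat = sum_xy / sum_xx              # mean(xy) / mean(x^2)
    r_xy = sum_xy / sqrt(sum_xx * sum_yy)   # uncentered correlation
    return beta_hat, r_xy

# Illustrative data lying exactly on y = 2x.
beta0, r0 = fit_through_origin([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(beta0, r0)
```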

==Numerical properties==

# The line goes through the "center of mass" point (<i style="text-decoration:overline">x</i>, <i style="text-decoration:overline">y</i>).
# The sum of the residuals is equal to zero, if the model includes a constant: <math>\textstyle\sum_{i=1}^n\hat\varepsilon_i=0.</math>
# The linear combination of the residuals, in which the coefficients are the ''x''-values, is equal to zero: <math>\textstyle\sum_{i=1}^nx_i\hat\varepsilon_i=0.</math>
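These three properties are easy to confirm numerically. The sketch below fits a line to a small made-up data set (the values are illustrative only, and the helper name <code>fit_and_residuals</code> is an assumption) and evaluates each quantity; all three should vanish up to floating-point rounding.

```python
def fit_and_residuals(x, y):
    """Fit y = alpha + beta * x by least squares and return (alpha, beta, residuals)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    beta = sxy / sxx
    alpha = mean_y - beta * mean_x
    residuals = [b - alpha - beta * a for a, b in zip(x, y)]
    return alpha, beta, residuals

# Made-up sample (illustrative only).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.1, 5.9, 8.2, 9.8]
alpha, beta, res = fit_and_residuals(xs, ys)

mean_x = sum(xs) / len(xs)
mean_y = sum(ys) / len(ys)
gap_at_centre = mean_y - (alpha + beta * mean_x)          # property 1
sum_residuals = sum(res)                                  # property 2
sum_x_residuals = sum(a * e for a, e in zip(xs, res))     # property 3
print(gap_at_centre, sum_residuals, sum_x_residuals)
```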

==Model-based properties==

Describing the statistical properties of the simple linear regression estimators requires the use of a [[statistical model]]. The following is based on assuming the validity of a model under which the estimates are optimal. It is also possible to evaluate the properties under other assumptions, such as [[Homoscedasticity|inhomogeneity]], but this is discussed elsewhere.

===Unbiasedness===

The estimators <math>\hat\alpha</math> and <math>\hat\beta</math> are [[Estimator bias|unbiased]]. This requires that we interpret the estimators as random variables, and so we have to assume that, for each value of ''x'', the corresponding value of ''y'' is generated as a mean response ''α + βx'' plus an additional random variable ''ε'' called the ''error term''. This error term has to be equal to zero on average, for each value of ''x''. Under this interpretation, the least-squares estimators <math>\hat\alpha</math> and <math>\hat\beta</math> will themselves be random variables, and they will unbiasedly estimate the "true values" ''α'' and ''β''.

===Confidence intervals===

The formulas given in the previous section allow one to calculate the ''point estimates'' of ''α'' and ''β'', that is, the coefficients of the regression line for the given set of data. However, those formulas do not tell us how precise the estimates are, i.e., how much the estimators <math>\hat\alpha</math> and <math>\hat\beta</math> vary from sample to sample for the specified sample size. So-called ''confidence intervals'' were devised to give a plausible set of values the estimates might have if one repeated the experiment a very large number of times.

The standard method of constructing confidence intervals for linear regression coefficients relies on the normality assumption, which is justified if either 1) the errors in the regression are [[normal distribution|normally distributed]] (the so-called ''classic regression'' assumption), or 2) the number of observations ''n'' is sufficiently large, in which case the estimator is approximately normally distributed. The latter case is justified by the [[central limit theorem]].

===Normality assumption===

Under the first assumption above, that of the normality of the error terms, the estimator of the slope coefficient will itself be normally distributed with mean ''β'' and variance <math style="height:1.5em">\sigma^2/\sum(x_i-\bar{x})^2,</math> where <math>\sigma^2</math> is the variance of the error terms (see [[Proofs involving ordinary least squares]]). At the same time the sum of squared residuals ''Q'' is distributed proportionally to [[chi-squared distribution|''χ''<sup>2</sup>]] with (''n''−2) degrees of freedom, and independently from <math style="height:1.5em">\hat\beta.</math> This allows us to construct a ''t''-statistic

: <math>t = \frac{\hat\beta - \beta}{s_{\hat\beta}}\ \sim\ t_{n-2},</math> where <math> s_{\hat\beta} = \sqrt{ \frac{\tfrac{1}{n-2}\sum_{i=1}^n \hat{\varepsilon}_i^{\,2}} {\sum_{i=1}^n (x_i -\bar{x})^2} }</math>

which has a [[Student's t-distribution|Student's ''t'']]-distribution with (''n''−2) degrees of freedom. Here <math>s_{\hat\beta}</math> is the ''standard error'' of the estimator <math style="height:1.5em">\hat\beta.</math>

Using this ''t''-statistic we can construct a confidence interval for ''β'':

: <math> \beta \in \Big[\ \hat\beta - s_{\hat\beta} t^*_{n-2},\ \hat\beta + s_{\hat\beta} t^*_{n-2}\ \Big] </math> at confidence level (1−''γ''),

where <math>t^*_{n-2}</math> is the (1−''γ''/2)-th quantile of the ''t''<sub>''n''–2</sub> distribution. For example, if ''γ'' = 0.05 then the confidence level is 95%.

Similarly, the confidence interval for the intercept coefficient ''α'' is given by

: <math> \alpha \in \Big[\ \hat\alpha - s_{\hat\alpha} t^*_{n-2},\ \hat\alpha + s_{\hat\alpha} t^*_{n-2}\ \Big] </math> at confidence level (1−''γ''),

where

: <math> s_{\hat\alpha} = s_{\hat\beta}\sqrt{\tfrac{1}{n}\textstyle\sum_{i=1}^n x_i^2}
= \sqrt{\tfrac{1}{n(n-2)}\left(\textstyle\sum_{j=1}^n \hat{\varepsilon}_j^{\,2} \right)
\frac{\sum_{i=1}^n x_i^2} {\sum_{i=1}^n (x_i -\bar{x})^2} }
</math>
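The two standard errors and the resulting intervals can be computed as in the following Python sketch. It assumes the quantile <math>t^*_{n-2}</math> is supplied by the caller (for instance from a printed ''t'' table), because the Python standard library has no Student's ''t'' quantile function; the name <code>coefficient_intervals</code> and the sample values are hypothetical.

```python
from math import sqrt

def coefficient_intervals(x, y, t_star):
    """Return ((alpha_lo, alpha_hi), (beta_lo, beta_hi)) for a given t quantile."""
    n = len(x)
    if n < 3:
        raise ValueError("at least 3 points are needed for n - 2 degrees of freedom")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    beta_hat = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sxx
    alpha_hat = mean_y - beta_hat * mean_x
    # Sum of squared residuals of the fitted line.
    rss = sum((b - alpha_hat - beta_hat * a) ** 2 for a, b in zip(x, y))
    s_beta = sqrt(rss / (n - 2) / sxx)                   # standard error of the slope
    s_alpha = s_beta * sqrt(sum(a * a for a in x) / n)   # standard error of the intercept
    alpha_ci = (alpha_hat - t_star * s_alpha, alpha_hat + t_star * s_alpha)
    beta_ci = (beta_hat - t_star * s_beta, beta_hat + t_star * s_beta)
    return alpha_ci, beta_ci

# Made-up sample with n = 5; 3.182 is the tabulated 0.975 quantile of t with 3 d.f.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.1, 5.9, 8.2, 9.8]
alpha_ci, beta_ci = coefficient_intervals(xs, ys, t_star=3.182)
print(alpha_ci, beta_ci)
```

Each interval is symmetric about its point estimate, so its midpoint recovers <math>\hat\alpha</math> or <math>\hat\beta</math>.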

[[Image:Okuns law with confidence bands.svg|thumb|300px|The US "changes in unemployment – GDP growth" regression with the 95% confidence bands.]]

The confidence intervals for ''α'' and ''β'' give us the general idea where these regression coefficients are most likely to be. For example, in the "Okun's law" regression shown at the beginning of the article the point estimates are <math style="height:0.9em">\hat\alpha=0.859</math> and <math style="height:1.5em">\hat\beta=-1.817.</math> The 95% confidence intervals for these estimates are

: <math>\alpha\in\big[\,0.76,\,0.96\,\big], \quad \beta\in\big[\,{-2.06},\,{-1.58}\,\big]</math> with 95% confidence.

In order to represent this information graphically, in the form of the confidence bands around the regression line, one has to proceed carefully and account for the joint distribution of the estimators. It can be shown{{citation needed|date=July 2012}} that at confidence level (1−''γ'') the confidence band has hyperbolic form given by the equation

: <math>
\hat{y}|_{x=\xi} \in \Bigg[
\hat\alpha + \hat\beta \xi \pm
t^*_{n-2} \sqrt{ \textstyle\frac{1}{n-2} \sum\hat{\varepsilon}_i^{\,2} \cdot
\Big(\frac{1}{n} + \frac{(\xi-\bar{x})^2}{\sum(x_i-\bar{x})^2}\Big)
}
\Bigg] .</math>
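The band formula can be evaluated pointwise. The Python sketch below is illustrative only: <code>confidence_band</code> is a hypothetical helper, the data are made up, and the ''t'' quantile is passed in by the caller. It shows the hyperbolic shape in the sense that the band is narrowest at <math>\xi = \bar{x}</math> and widens as <math>\xi</math> moves away from it.

```python
from math import sqrt

def confidence_band(x, y, xi, t_star):
    """Return (lower, upper) limits of the band for the fitted line at x = xi."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    beta_hat = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sxx
    alpha_hat = mean_y - beta_hat * mean_x
    rss = sum((b - alpha_hat - beta_hat * a) ** 2 for a, b in zip(x, y))
    # Half-width from the band equation: grows with the distance of xi from mean_x.
    half_width = t_star * sqrt(rss / (n - 2) * (1.0 / n + (xi - mean_x) ** 2 / sxx))
    centre = alpha_hat + beta_hat * xi
    return centre - half_width, centre + half_width

# Made-up sample; 3.182 is the tabulated 0.975 quantile of t with 3 d.f.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.1, 5.9, 8.2, 9.8]
band_at_mean = confidence_band(xs, ys, xi=3.0, t_star=3.182)
band_at_edge = confidence_band(xs, ys, xi=5.0, t_star=3.182)
print(band_at_mean, band_at_edge)
```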

===Asymptotic assumption===

The alternative second assumption states that when the number of points in the dataset is "large enough", the [[law of large numbers]] and the [[central limit theorem]] become applicable, and then the distribution of the estimators is approximately normal. Under this assumption all formulas derived in the previous section remain valid, with the only exception that the quantile ''t*''<sub style="position:relative;left:-0.4em">''n''−2</sub> of [[Student's t-distribution|Student's ''t'']] distribution is replaced with the quantile ''q*'' of the [[standard normal distribution]]. Occasionally the fraction {{Frac|(''n''−2)}} is replaced with {{Frac|''n''}}. When ''n'' is large such a change does not alter the results appreciably.
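As a small illustration of how much the quantile changes, the standard normal quantile ''q*'' can be obtained from Python's standard library and compared with the Student's ''t'' quantile for 13 degrees of freedom quoted in the numerical example of this article (2.1604). This is only a sketch; the variable names are arbitrary.

```python
from statistics import NormalDist

gamma = 0.05                                   # 95% confidence level
q_star = NormalDist().inv_cdf(1 - gamma / 2)   # standard normal quantile, about 1.96
t_star_13 = 2.1604                             # t quantile quoted in the numerical example

# The normal quantile is smaller, so the asymptotic interval is narrower.
ratio = q_star / t_star_13
print(q_star, ratio)
```

With only 15 observations the asymptotic interval would be roughly 9% narrower than the one based on the ''t''-distribution, which is why the substitution is usually reserved for large ''n''.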

==Numerical example==

This example concerns the data set from the [[Ordinary least squares]] article. This data set gives the average mass of American women aged 30–39 as a function of their height. Although the [[Ordinary least squares|OLS]] article argues that it would be more appropriate to run a quadratic regression for this data, the simple linear regression model is applied here instead.

:{|class="wikitable"
|- style="text-align:right;"
! ''x<sub>i</sub>''
| 1.47 || 1.50 || 1.52 || 1.55 || 1.57 || 1.60 || 1.63 || 1.65 || 1.68 || 1.70 || 1.73 || 1.75 || 1.78 || 1.80 || 1.83
! style="text-align:left;" | Height (m)
|- style="text-align:right;"
! ''y<sub>i</sub>''
| 52.21 || 53.12 || 54.48 || 55.84 || 57.20 || 58.57 || 59.93 || 61.29 || 63.11 || 64.47 || 66.28 || 68.10 || 69.92 || 72.19 || 74.46
! style="text-align:left;" | Mass (kg)
|}

There are ''n'' = 15 points in this data set. A hand calculation starts by finding the following five sums:

: <math>\begin{align}
& S_x = \sum x_i = 24.76,\quad S_y = \sum y_i = 931.17 \\
& S_{xx} = \sum x_i^2 = 41.0532, \quad S_{xy} = \sum x_iy_i = 1548.2453, \quad S_{yy} = \sum y_i^2 = 58498.5439
\end{align}</math>

These quantities are used to calculate the estimates of the regression coefficients and their standard errors.

: <math>\begin{align}
& \hat\beta = \frac{nS_{xy}-S_xS_y}{nS_{xx}-S_x^2} = 61.272 \\
& \hat\alpha = \tfrac{1}{n}S_y - \hat\beta \tfrac{1}{n}S_x = -39.062 \\
& s_\varepsilon^2 = \tfrac{1}{n(n-2)} \big( nS_{yy}-S_y^2 - \hat\beta^2(nS_{xx}-S_x^2) \big) = 0.5762 \\
& s_\beta^2 = \frac{n s_\varepsilon^2}{nS_{xx} - S_x^2} = 3.1539 \\
& s_\alpha^2 = s_\beta^2 \tfrac{1}{n} S_{xx} = 8.63185
\end{align}</math>

The 0.975 quantile of Student's ''t''-distribution with 13 degrees of freedom is t<sup>*</sup><sub style="position:relative;left:-.3em">13</sub> = 2.1604, and thus the 95% confidence intervals for ''α'' and ''β'' are

: <math>\begin{align}
& \alpha \in [\,\hat\alpha \mp t^*_{13} s_\alpha \,] = [\,{-45.4},\ {-32.7}\,] \\
& \beta \in [\,\hat\beta \mp t^*_{13} s_\beta \,] = [\, 57.4,\ 65.1 \,]
\end{align}</math>

The [[Pearson product-moment correlation coefficient|product-moment correlation coefficient]] might also be calculated:

: <math>
\hat{r} = \frac{nS_{xy} - S_xS_y}{\sqrt{(nS_{xx}-S_x^2)(nS_{yy}-S_y^2)}} = 0.9945
</math>
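The hand calculation above can be reproduced with a short Python script. This is a sketch that follows the five-sums formulas of this section step by step; the variable names are chosen for illustration, and the quantile 2.1604 is taken from the text rather than computed.

```python
from math import sqrt

# Height (m) and mass (kg) from the table in this section.
heights = [1.47, 1.50, 1.52, 1.55, 1.57, 1.60, 1.63, 1.65,
           1.68, 1.70, 1.73, 1.75, 1.78, 1.80, 1.83]
masses = [52.21, 53.12, 54.48, 55.84, 57.20, 58.57, 59.93, 61.29,
          63.11, 64.47, 66.28, 68.10, 69.92, 72.19, 74.46]

n = len(heights)
S_x = sum(heights)
S_y = sum(masses)
S_xx = sum(h * h for h in heights)
S_xy = sum(h * m for h, m in zip(heights, masses))
S_yy = sum(m * m for m in masses)

beta_hat = (n * S_xy - S_x * S_y) / (n * S_xx - S_x ** 2)
alpha_hat = S_y / n - beta_hat * S_x / n
s_eps2 = (n * S_yy - S_y ** 2 - beta_hat ** 2 * (n * S_xx - S_x ** 2)) / (n * (n - 2))
s_beta2 = n * s_eps2 / (n * S_xx - S_x ** 2)
s_alpha2 = s_beta2 * S_xx / n

t_star = 2.1604  # 0.975 quantile of t with 13 degrees of freedom, as quoted in the text
alpha_ci = (alpha_hat - t_star * sqrt(s_alpha2), alpha_hat + t_star * sqrt(s_alpha2))
beta_ci = (beta_hat - t_star * sqrt(s_beta2), beta_hat + t_star * sqrt(s_beta2))
r_hat = (n * S_xy - S_x * S_y) / sqrt((n * S_xx - S_x ** 2) * (n * S_yy - S_y ** 2))

print(beta_hat, alpha_hat, s_eps2, s_beta2, s_alpha2)
print(alpha_ci, beta_ci, r_hat)
```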

This example also demonstrates that sophisticated calculations will not overcome the use of badly prepared data. The heights were originally given in inches, and have been converted to the nearest centimetre. Since one inch is exactly 2.54 cm, rounding to whole centimetres does ''not'' reproduce the original values exactly. The original inches can be recovered by Round(''x''/0.0254) and then re-converted to metric without rounding; if this is done, the results become

: <math>\begin{align}
& \hat\beta = 61.6746 \\
& \hat\alpha = -39.7468
\end{align}</math>

Thus a seemingly small variation in the data has a real effect on the estimates.
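The correction can be checked with a few lines of Python. The sketch below assumes that Round means rounding to the nearest whole inch; the helper <code>fit_line</code> and the variable names are illustrative.

```python
def fit_line(x, y):
    """Least-squares (alpha_hat, beta_hat) for y = alpha + beta * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    beta = sxy / sxx
    return mean_y - beta * mean_x, beta

heights_m = [1.47, 1.50, 1.52, 1.55, 1.57, 1.60, 1.63, 1.65,
             1.68, 1.70, 1.73, 1.75, 1.78, 1.80, 1.83]
masses_kg = [52.21, 53.12, 54.48, 55.84, 57.20, 58.57, 59.93, 61.29,
             63.11, 64.47, 66.28, 68.10, 69.92, 72.19, 74.46]

# Recover whole inches, then convert back to metres without rounding.
inches = [round(h / 0.0254) for h in heights_m]
heights_exact = [i * 0.0254 for i in inches]

alpha_rounded, beta_rounded = fit_line(heights_m, masses_kg)
alpha_exact, beta_exact = fit_line(heights_exact, masses_kg)
print(inches)
print(beta_rounded, alpha_rounded)
print(beta_exact, alpha_exact)
```

The recovered heights turn out to be the consecutive whole inches 58 to 72, and refitting on them gives the corrected slope and intercept shown above.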

==See also==

* [[Deming regression]] – simple linear regression with errors measured non-vertically
* [[Segmented regression|Linear segmented regression]]
* [[Proofs involving ordinary least squares]] – derivation of all formulas used in this article in general multidimensional case

==References==

{{Reflist}}

==External links==

* [http://mathworld.wolfram.com/LeastSquaresFitting.html Wolfram MathWorld's explanation of Least Squares Fitting, and how to calculate it]

{{statistics}}

{{DEFAULTSORT:Simple Linear Regression}}

[[Category:Regression analysis]]

[[Category:Estimation theory]]

[[Category:Parametric statistics]]

Revision as of 12:20, 27 January 2014

